FastAPI framework, high performance, easy to learn, fast to code, ready for production. 🚀 Web APIs with Python type hints. 🐍 By @tiangolo 🤓

Internet

Joined September 2020

- Tweets 840

- Following 1

- Followers 39,744

- Likes 4,493

Here's a smol bug-fix release for the weekend, FastAPI 0.121.1 🐛

luzzodev just fixed Depends(func, scope="function") when used in the router, at the top level. 😎

github.com/fastapi/fastapi

Here's FastAPI 0.121.0 🍰

In case you needed to exit early from dependencies with yield, you can now opt in with:

Depends(some_func, scope="function")

fastapi.tiangolo.com/tutoria…
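To make the timing difference concrete, here is a minimal standard-library sketch of when a dependency's exit code runs relative to sending the response. It is a toy simulation, not FastAPI's implementation. The names `handle_request`, `dependency`, `path_operation`, and `send` are hypothetical. The scope name `"request"` for the default behavior is an assumption based on FastAPI's documentation as I understand it.

```python
from contextlib import contextmanager


def handle_request(scope: str) -> list[str]:
    """Simulate one request and return the order of events.

    Toy model only: "function" closes the dependency before the response
    is sent, "request" (assumed name of the default) closes it afterwards.
    """
    events: list[str] = []

    @contextmanager
    def dependency():
        events.append("dependency: setup")
        try:
            yield "resource"
        finally:
            # This plays the role of the code after `yield` in a dependency.
            events.append("dependency: exit code")

    def path_operation(resource: str) -> str:
        events.append("path operation: run")
        return f"used {resource}"

    def send(body: str) -> None:
        events.append("response: sent")

    if scope == "function":
        # Exit code runs as soon as the path operation function returns.
        with dependency() as resource:
            body = path_operation(resource)
        send(body)
    elif scope == "request":
        # Exit code runs only after the response has been sent.
        with dependency() as resource:
            body = path_operation(resource)
            send(body)
    else:
        raise ValueError(f"unknown scope: {scope!r}")
    return events


for name in ("function", "request"):
    print(name, "->", handle_request(name))
```

In this sketch the only difference between the two scopes is whether `send` is called inside or outside the `with` block, which is enough to show why an early exit matters when the dependency holds a resource such as a database session.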

Here's FastAPI 0.120.2 ☕️

Fixing a bug when generating schemas for multiple nested models 🐛

github.com/fastapi/fastapi

fastapi-cli 0.0.14 comes with support for Python 3.14 🐍

Just install "fastapi[standard]" 🤓

fastapi.tiangolo.com

Quick fix release, 0.119.1 🍫

This fixes compatibility (warnings) with @pydantic 2.12.1 on Python 3.14 🐍

Nothing changed for end users, but it still took a lot of work underneath to get it done. 😅 Thanks @OxyKodit! 🙌

github.com/fastapi/fastapi

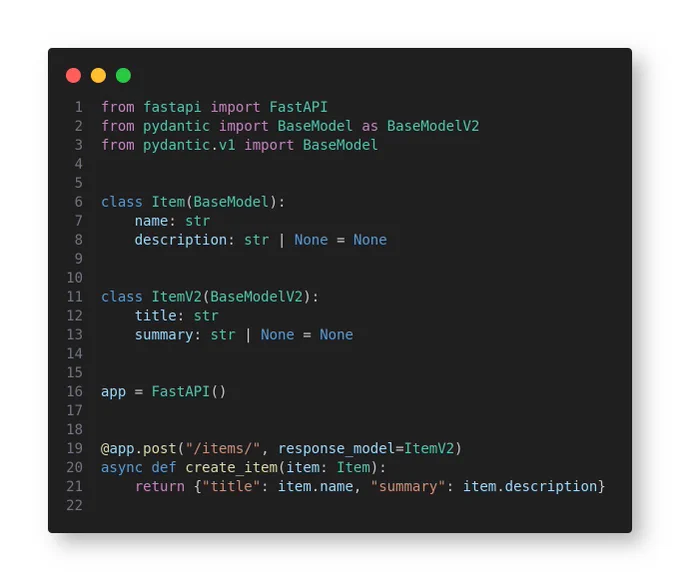

Here's FastAPI 0.119.0 🚀

With support for both @pydantic v2 and v1 on the same app, at the same time 🤯

This is just so you can migrate to Pydantic v2 if you haven't done it yet. Here's your (last) chance! 🤓

Pydantic v1 is now deprecated ⛔️

Read more fastapi.tiangolo.com/how-to/…

Here's FastAPI 0.118.1, with compatibility for the latest @pydantic. 🚀

This one had the combined effort of the community, the @pydantic team, and the @FastAPI team, all together. 🙌

github.com/fastapi/fastapi

FastAPI retweeted

In your @FastAPI apps, are you using @pydantic version 1 or 2?

If v1, why haven't you upgraded to v2? 🤔

I wanna make sure everyone upgraded or can upgrade so that I can remove support for v1.

- Pydantic v2: 76%

- Pydantic v1: 6%

- Pydantic 🍿: 18%

877 votes • Final results

FastAPI 0.118.0 was just released 🎁

🐛 This fixes a bug when using a StreamingResponse and dependencies with yield or UploadFile.

Details: Now the exit code of dependencies with yield runs after the response is sent back.

If you want to read more: fastapi.tiangolo.com/advance…

What's a better weekend treat than a release packed with features and bug fixes? 🍰

FastAPI 0.117.0 has too many things to list. ✨

Check the release notes: fastapi.tiangolo.com/release…

Shoutout to YuriiMotov for the work reviewing and tweaking PRs! 🙌

Just released, fastapi-cli version 0.0.12 🤓

With support for the PORT environment variable, used by many cloud providers. 🚀

Just use:

✨ fastapi run ✨

Thanks @BurroMarco! 🙌
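As a rough illustration of the idea, a server entry point can pick its port from the `PORT` environment variable and fall back to a default. This is a minimal sketch, not fastapi-cli's actual code. The helper `resolve_port`, the `cli_port` parameter, and the rule that an explicit port wins over the environment variable are all assumptions made for the example.

```python
import os
from typing import Mapping, Optional

DEFAULT_PORT = 8000  # assumed fallback for this sketch


def resolve_port(
    cli_port: Optional[int] = None,
    env: Mapping[str, str] = os.environ,
) -> int:
    """Pick a port: explicit value first, then PORT, then the default."""
    if cli_port is not None:
        return cli_port
    raw = env.get("PORT")
    if raw is None or raw.strip() == "":
        return DEFAULT_PORT
    port = int(raw)  # raises ValueError if PORT is not a number
    if not 0 < port < 65536:
        raise ValueError(f"PORT out of range: {port}")
    return port


print(resolve_port(env={"PORT": "8080"}))
```

Cloud platforms that inject `PORT` at runtime can then start the app without any extra flags, which is the convenience the release describes.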

Here's FastAPI 0.116.2 with support for the latest Starlette 🚀

github.com/fastapi/fastapi

FastAPI retweeted

Universal Deep Research by NVIDIA, which wraps around any LLM

What is more important!? It uses @FastAPI 🥳

💻 NVIDIA introduces Universal Deep Research, a system that lets users plug in any LLM and run custom research strategies as code.

This breaks from fixed deep research products by handing over control of model choice, source rules, verification, and spend.

A natural language strategy is compiled into 1 generator function that yields structured progress updates while calling search and the model.

Intermediate facts live in named variables rather than the prompt, so full runs fit in about 8K tokens and remain traceable.

Tools are called synchronously for deterministic behavior, and the model acts as a local helper for summarizing, ranking, or extracting.

Control flow runs on CPU while targeted model calls touch small text slices, which lowers GPU usage, latency, and cost.

Reliability improves because generated code mirrors each step with explicit comments, which reduces skipped steps and surprise constraints.

Execution is sandboxed to block host access, keeping strategies confined to a safe runtime.

The demo UI lets users edit strategies and use minimal, expansive, and intensive templates from single pass to iterative loops.

Limits include dependence on the model's code generation, the quality of the user's plan, and little mid-run steering beyond stop.

For teams this means bring your own model and method, so the best models pair with domain playbooks without vendor lock-in.

The view here is that code-driven control with scoped model calls makes deep research fast, cheap, and auditable.

A rich strategy library will drive adoption, because most users will not author complex plans.

----

Paper – arxiv.org/abs/2509.00244

Paper Title: "Universal Deep Research: Bring Your Own Model and Strategy"
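The generator-based design described in the post can be sketched in a few lines. This is a hypothetical illustration, not code from the paper or from NVIDIA. The names `research_strategy`, `fake_search`, and `fake_summarize` are invented for the example, and the search tool and model are replaced by plain Python stand-ins.

```python
from typing import Callable, Dict, Iterator, List


def research_strategy(
    question: str,
    search: Callable[[str], List[str]],
    summarize: Callable[[str], str],
) -> Iterator[Dict[str, str]]:
    """One generator that yields structured progress updates as it runs."""
    yield {"step": "search", "detail": f"searching for: {question}"}
    # Tool call is synchronous; results live in a named variable,
    # not in an ever-growing prompt.
    results = search(question)

    yield {"step": "summarize", "detail": f"summarizing {len(results)} results"}
    # The "model" only ever sees one small text slice per call.
    summaries = [summarize(text) for text in results]

    yield {"step": "report", "detail": " ".join(summaries)}


def fake_search(query: str) -> List[str]:
    # Stand-in for a real search tool.
    return ["FastAPI is a web framework.", "It uses type hints."]


def fake_summarize(text: str) -> str:
    # Stand-in for a model call: keep only the first sentence.
    return text.split(".")[0] + "."


updates = list(research_strategy("What is FastAPI?", fake_search, fake_summarize))
for update in updates:
    print(update)
```

Because control flow lives in ordinary code, each yielded update maps to one explicit step, which is what makes a run traceable.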

FastAPI retweeted

I was just checking, and @FastAPI has been downloaded 4,297,849 times... in the last day.

4M+ times a day. 🚀

That also means that, since you started reading this post, it has been downloaded around 400 times. 🤯

pypistats.org/packages/fasta…
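The "around 400 times" figure follows from simple arithmetic on the daily count, assuming downloads are spread evenly over the day (an assumption made only for this back-of-the-envelope check):

```python
DOWNLOADS_PER_DAY = 4_297_849  # figure quoted in the post
SECONDS_PER_DAY = 24 * 60 * 60

# Average download rate, assuming an even spread across the day.
per_second = DOWNLOADS_PER_DAY / SECONDS_PER_DAY

# Time needed to accumulate about 400 downloads at that rate.
seconds_for_400 = 400 / per_second

print(f"{per_second:.1f} downloads per second")
print(f"{seconds_for_400:.1f} seconds for 400 downloads")
```

At roughly 50 downloads per second, 400 downloads correspond to about 8 seconds, a plausible time to read the post.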

polar is open source, and built with open source technology to a large degree

the polar open source stack™ — would never have been possible without...

python backend

> @FastAPI by @tiangolo

> Starlette, HTTPX & Uvicorn

> Dramatiq

> @pydantic

> @sqlalchemy

> Authlib by @lepture

> APScheduler

> React Email by @resend

frontend / cli

> @nextjs

> @shadcn

> @tailwindcss

> Motion by @mattgperry

> React Query by @tannerlinsley

> @nuqs47ng

> React Hook Form

> @EffectTS_ by @EffectfulTech (cli under development)