Machine learning and Data science researcher focusing on computer vision...

Joined July 2014

- Tweets 952

- Following 524

- Followers 757

- Likes 5,493

Jirka⚡ retweeted

Pre-training an LLM in 9 days

A recipe to pre-train models in 9 days, to become comparable AI assistants to the likes of Apple OpenELM and Microsoft Phi.

This repo contains the model architecture, training scripts, and utilities of 1.5-Pints and 0.12-Pint

🔑 Architecture: Transformer-based, 1.5-Pints (2B params), 0.12-Pint (120M params)

🔬 Data: Expository Prose V1, emphasizes quality over quantity

🚀 Training: 9 days, uses flash attention and xformers

📊 Performance: Comparable to larger models like Apple OpenELM, Microsoft Phi

🛠️ Implementation:

- PyTorch Lightning for distributed training

- Finetuning and DPO scripts

- HuggingFace conversion

- Efficient techniques: flash attention, xformers

- Configurable architecture

- Comprehensive testing suite

Jirka⚡ retweeted

Ready to build a “Chat with your code” application with @ollama, Weaviate, and @llama_index on @LightningAI Studio?

We’ve just published a Lightning AI Studio template that you can simply copy. You’ll be up and running with your Retrieval-Augmented Generation (RAG) pipeline in minutes.

Jump right in here: lightning.ai/weaviate/studio…

Jirka⚡ retweeted

Dropped today - fresh FSDP implementation in Thunder - in only 500 lines 🤯🤯

You know a compiler is powerful when FSDP can be implemented in a single file in < 500 lines 😉😉

github.com/Lightning-AI/ligh…

Jirka⚡ retweeted

Apple's 3B LLM Outperforms GPT-4 🤯

📌 They also found that the performance of ReaLM and GPT-4 are very similar for the unseen domain.

📌 ReALM significantly improves how conversational assistants like Siri or Alexa can understand the way humans naturally talk. Imagine you're looking at a list of restaurants on your smartphone and you say "direct me to the one on Main Street" - ReALM is able to understand which restaurant you're referring to, even though you didn't specify the exact name.

📌 It achieves this by cleverly converting the visual layout of what's on your screen into a text format that a powerful language-understanding AI model can reason about. This allows ReALM to resolve ambiguous references (like "it", "that one", "the second item") in a way similar to how humans intuitively grasp context.

📌 ReALM outperformed prior approaches on this task and nearly matched GPT-4 model while being much more efficient, making it practical to implement on devices like smartphones.

📌 So now AI assistants can converse more naturally, enabling interactions like "book a table at the Italian place I just looked at" without having to tediously spell everything out.

Jirka⚡ retweeted

Meet Thunder, the new compiler for PyTorch!

Make PyTorch models up to 40% faster (it's still early days 😳)

forbes.com/sites/craigsmith/…

Jirka⚡ retweeted

Really excited for the Thunder Compiler for @PyTorch presented by @lantiga and @ThomasViehmann in [S62544] at #GTC24! Join the session if you are around :) nvidia.com/gtc/session-catal…

Jirka⚡ retweeted

Excited to announce our new compiler - Thunder!

(built in collaboration with NVIDIA). 🤯 🤯

Thunder is a source to source compiler for PyTorch. It speeds up PyTorch models.

As an example, it speeds up llama 2 7B by 40%.

github.com/Lightning-AI/ligh…

Jirka⚡ retweeted

I have a lot to speak here. Previously we developed our own tool on top of torch, it was a bit heavy and over complicated.

Starting from jina-embeddings-v1 to v2, we completely switched to Lightning Fabric (tough decision to drop the old): lightning.ai/docs/fabric/sta…, we literally have 2.5 ppl working on it for 3 days and get our first pipeline running.

Now we have much more complicated data loading, loss function etc, @LightningAI Fabric has been working great for us.

is @LightningAI still the best way to train a pytorch model without all the extra cruff?

I completed Inspiring and Motivating Individuals! Check out my certificate coursera.org/share/e8e30d46c… #Coursera

Jirka⚡ retweeted

I've read a ton of research papers this year. And to conclude this eventful year in AI, I've compiled a selection of 10 noteworthy papers from 2023 that I am discussing in my new article: magazine.sebastianraschka.co…

To get a sneak peak, I'm covering:

- insights into LLM training runs

- new openly available LLMs

- efficient finetuning methods

- improving "small" LLMs

- pretraining on domain-specific data

- new techniques to align LLMs with human preferences

- enhancing LLMs with high-quality data and instruction sets

- ConvNet vs vision transformer comparisons

- recent developments in image segmentation

- video synthesis with latent diffusion models

I'm wishing you all a great start to 2024!

Jirka⚡ retweeted

All-in-one. Zero setup. It’s finally here - Lightning AI Studios

Launch a free Studio - lightning.ai/

Jirka⚡ retweeted

Introducing Lightning AI Studios - A persistent GPU cloud environment. Setup once. Ready any time.

Code online. Code from your local IDE. Prototype. Train. Serve. Multi-node. All from the same place.

No credit card. 6 Free GPU hours/month.

lightning.ai

Moved up to rank 32 on #kaggle. I'm not addicted. I can quit when I want. kaggle.com/competitions/UBC-…

Jirka⚡ retweeted

Lightning 2.1 is here!

⚡Enhanced efficiency for training large models with FSDP

⚡Seamless scaling without substantial code changes

⚡Faster speeds using bitsandbytes advanced precision plugins

See all the updates ➡️ lightning.ai/pages/community…

#GenAI #MachineLearning #LLMs

Jirka⚡ retweeted

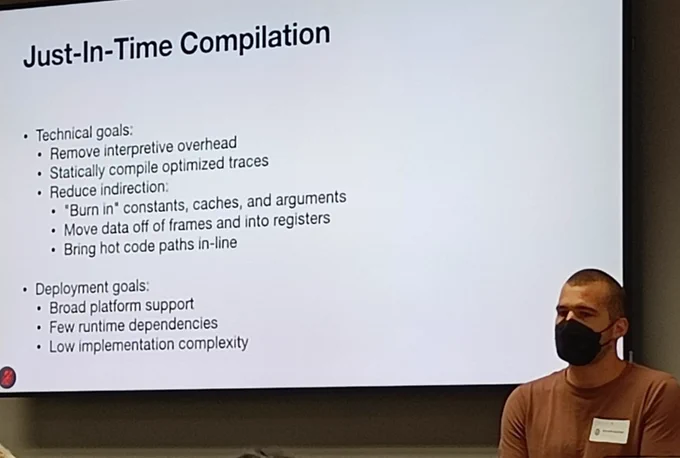

Brandt Bucher proposes a "Copy-and-Patch" JIT compiler based on LLVM for Python 3.13. Machine code is generated offline, copy+patch at runtime to inject arguments. Windows, Linux, macOS support on x86-64 and ARM64 (Python Tier-1 and Tier-2), more platforms supported later.

I just published "Scalable Automation for Retry/Rerun Failed Checks in #GitHub Actions" #DevOps link.medium.com/jLf7yxcaoDb

Jirka⚡ retweeted

Tomorrow, @Tesla will turn on a massive and very expensive 10,000 unit NVIDIA H100 GPU cluster to help it train FSD. But that got me wondering, what is the difference between these new H100 GPUs and the older A100 graphics processing units (GPUs) Tesla has been using for the last couple years? I briefly break it down below.

NVIDIA A100:

This GPU launched in 3 years ago in late-2020. It introduced a 20x performance improvement over the previous generation. The A100 is designed for high-performance computing and artificial intelligence (AI) workloads:

• 6,912 CUDA cores

• 432 tensor cores

• 40 GB or 80 GB of high-bandwidth memory (HBM2)

NVIDIA H100:

This ~$40k GPU launched in late 2022. Up to 30x faster than A100, and is up to 9x faster for AI training.

The H100 is designed for graphics-intensive workloads such as video training (FSD videos), and is easy to scale up:

• 18,432 CUDA cores

• 640 tensor cores

• 80 streaming multiprocessors (SMs)

• Higher energy usage than A100

With the H100, high performance computing is over 5x faster compared to A100.

These new H100 GPUs will enable Tesla to train FSD faster and better than ever, but NVIDIA can't keep up with GPU demand. As a result, Tesla is spending $1 billion+ to build its own supercomputer named Dojo. It uses the company's hyper optimized custom designed chip. Tesla is MUCH more than just a car company.

This supercomputer will also train Tesla's fleet of vehicles and process data from them. @elonmusk said last month: “Frankly...if they (NVIDIA) could deliver us enough GPUs, we might not need Dojo.”

Tesla is bringing online its NVIDIA H100 GPU cluster at the same time it's activating Dojo. This will dramatically increase Tesla's compute capabilities to a level that no other automaker could dream of right now. Take a look below at Tesla's internal forecast for the compute power of Dojo. Brace yourselves, everyone.

Tesla's FSD V12 end to end training is compute bottlenecked, but the company is taking active measures to ensure that it won't be in the future. According to Elon, Tesla will spend over $2B in 2023 alone on training compute, and will do so again in 2024.

Buckle up everyone, the acceleration of progress is about to get nutty!

Great swag from @LightningAI to core contributors of #torchmetrics 💜

Jirka⚡ retweeted

This is huge: Llama-v2 is open source, with a license that authorizes commercial use!

This is going to change the landscape of the LLM market.

Llama-v2 is available on Microsoft Azure and will be available on AWS, Hugging Face and other providers

Pretrained and fine-tuned models are available with 7B, 13B and 70B parameters.

Llama-2 website: ai.meta.com/llama/

Llama-2 paper: ai.meta.com/research/publica…

A number of personalities from industry and academia have endorsed our open source approach: about.fb.com/news/2023/07/ll…