AI Developer Experience @GoogleDeepMind | prev: Tech Lead at @huggingface, AWS ML Hero 🤗 Sharing my own views and AI News 🧑🏻💻 philschmid.de

Nürnberg

Joined June 2019

TIL: Claude Code's local sandbox environment is open source.

> native OS sandboxing primitives (sandbox-exec on macOS, bubblewrap on Linux) and proxy-based network filtering. It can be used to sandbox the behaviour of agents, local MCP servers, bash commands and arbitrary processes.

Good read for the weekend :)

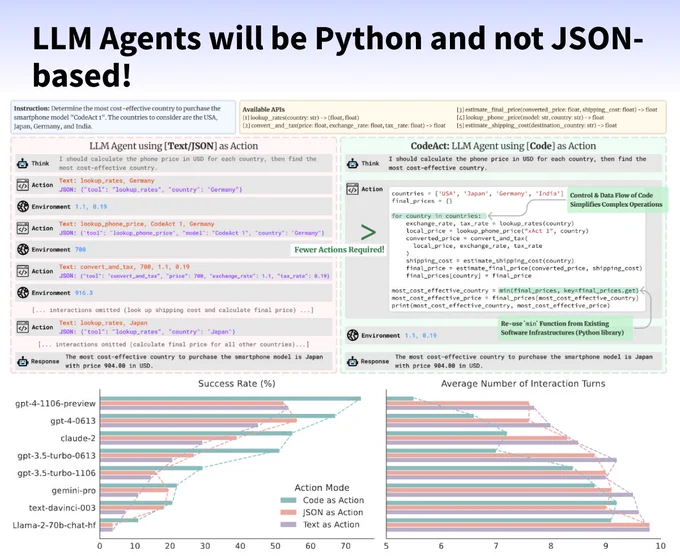
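To make the Linux half of that quote concrete, here is a minimal sketch (not Claude Code's implementation) that wraps a command with bubblewrap: a read-only root filesystem, one writable project directory, and no network at all. The helper names `build_bwrap_argv` and `run_sandboxed` are hypothetical, and cutting the network off entirely is a simplification; the open-source project instead filters traffic through a proxy.

```python
import shutil
import subprocess
from typing import Sequence


def build_bwrap_argv(command: Sequence[str], workdir: str, allow_network: bool = False) -> list[str]:
    """Hypothetical helper: build a bubblewrap (bwrap) command line."""
    argv = [
        "bwrap",
        "--ro-bind", "/", "/",       # host filesystem, read-only
        "--dev", "/dev",             # minimal device nodes
        "--proc", "/proc",           # fresh procfs for the new PID namespace
        "--tmpfs", "/tmp",           # private, throwaway /tmp
        "--bind", workdir, workdir,  # the only writable host path (after /tmp so it is not hidden)
        "--unshare-pid",             # sandboxed process cannot see host processes
        "--die-with-parent",         # tear the sandbox down if the agent exits
        "--new-session",             # detach from the controlling terminal
        "--chdir", workdir,
    ]
    if not allow_network:
        argv.append("--unshare-net")  # empty network namespace: no network access
    return argv + ["--", *command]


def run_sandboxed(command: Sequence[str], workdir: str, allow_network: bool = False) -> subprocess.CompletedProcess:
    """Run `command` under bwrap (Linux only) and capture its output."""
    if shutil.which("bwrap") is None:
        raise RuntimeError("bubblewrap (bwrap) is not installed")
    argv = build_bwrap_argv(command, workdir, allow_network)
    return subprocess.run(argv, capture_output=True, text=True, check=False)
```

With this, `run_sandboxed(["python", "step.py"], "/home/me/project")` lets the script read the disk but write only inside the project. On macOS the corresponding primitive is `sandbox-exec` with a sandbox profile.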

The next LLM agents will be Python-based, not JSON-based! 🐍 CodeAct proposes a new framework that generates executable Python code instead of JSON to handle more challenging control flows. It is not only more accurate but also reduces the number of actions needed for complex tasks. 🤯

LLM agents are typically prompted to produce actions by generating JSON, which restricts flexibility (e.g., using multiple tools or loops). CodeAct uses executable Python code to consolidate LLM agents' actions into a unified “action space”. 💡

Implementation

1️⃣ Provide a system prompt that explains the CodeAct framework, including few-shot examples and the tools (Python function signatures or docstrings).

2️⃣ The user prompts the LLM with a request or task.

3️⃣ The LLM generates Python code as the action, based on the provided “tools”.

4️⃣ Execute the code with a Python interpreter (be cautious here).

5️⃣ Capture the output or error messages and provide this information to the LLM.

6️⃣ The LLM then decides whether to modify its previous code or generate the response for the user.

Steps 3-6 are repeated until the LLM decides to submit a final response or reaches a predefined number of interactions.
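The six steps fit in a short loop. This is a minimal sketch, not the paper's reference implementation: `llm` is any callable that maps a chat history to a reply, the `FINAL:` stop marker and every function name are hypothetical, the model is replaced by a scripted stand-in, and `exec()` stands in for a properly sandboxed interpreter.

```python
import contextlib
import inspect
import io
import traceback
from typing import Callable


def build_system_prompt(tools: dict[str, Callable]) -> str:
    """Step 1: describe the framework and expose tools as Python signatures."""
    signatures = "\n".join(f"def {name}{inspect.signature(fn)}" for name, fn in tools.items())
    return (
        "Solve the task by replying with Python code. You may call these tools:\n"
        f"{signatures}\n"
        "When you are done, reply with 'FINAL: <answer>'."
    )


def execute_action(code: str, namespace: dict) -> str:
    """Steps 4-5: run the code, return stdout (plus the traceback on error).
    exec() is NOT a sandbox: run this inside an isolated environment."""
    buffer = io.StringIO()
    try:
        with contextlib.redirect_stdout(buffer):
            exec(code, namespace)  # shared namespace keeps variables across turns
    except Exception:
        return buffer.getvalue() + traceback.format_exc()
    return buffer.getvalue()


def codeact_loop(llm: Callable[[list[dict]], str], task: str,
                 tools: dict[str, Callable], max_turns: int = 5) -> str:
    messages = [
        {"role": "system", "content": build_system_prompt(tools)},
        {"role": "user", "content": task},  # step 2
    ]
    namespace = dict(tools)
    for _ in range(max_turns):
        action = llm(messages)  # step 3: the model writes code
        messages.append({"role": "assistant", "content": action})
        if action.startswith("FINAL:"):  # step 6: submit the answer
            return action.removeprefix("FINAL:").strip()
        observation = execute_action(action, namespace)
        messages.append({"role": "user", "content": f"Observation:\n{observation}"})
    return "Stopped after max_turns without a final answer."


def get_price(item: str) -> int:
    """Toy tool with canned prices."""
    return {"apple": 3, "pear": 2}[item]


def scripted_llm(replies):
    """Stand-in for a real model: returns canned replies in order."""
    it = iter(replies)
    return lambda messages: next(it)


llm = scripted_llm([
    "total = sum(get_price(i) for i in ['apple', 'pear'])\nprint(total)",
    "FINAL: An apple and a pear cost 5.",
])
print(codeact_loop(llm, "What do an apple and a pear cost together?", {"get_price": get_price}))
```

Feeding the traceback back as an observation is what enables the self-debugging described in the insights: the next model turn sees the exact error and can rewrite its code.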

Insights

🚀 Outperforms existing methods with up to 20% higher success rates

⚡ Requires up to 30% fewer actions to solve tasks

🤖 GPT-3.5 CodeAct matches GPT-4 JSON performance

🛠️ Use of error messages allows self-debugging and improves performance

📚 Created the CodeActInstruct dataset, consisting of 7,000+ multi-turn interactions

💬 Includes datasets like OpenOrca and ShareGPT in the training mix to preserve general language understanding

🧩 Use of Python code allows complex control flows with loops or conditions
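A toy illustration of the control-flow point, with a hypothetical tool and made-up data (not an example from the paper): a JSON agent needs one action per tool call, while a single code action can loop and filter.

```python
import json


def lookup_weather(city: str) -> str:
    """Toy tool with canned data."""
    return {"Berlin": "sunny", "Paris": "rain", "Rome": "sunny"}[city]


cities = ["Berlin", "Paris", "Rome"]

# JSON-style agent: one action (and one model turn) per tool call;
# filtering the results would need yet another turn.
json_actions = [
    json.dumps({"tool": "lookup_weather", "arguments": {"city": city}}) for city in cities
]

# CodeAct-style agent: one action containing a loop and a condition.
code_action = "sunny = [c for c in cities if lookup_weather(c) == 'sunny']"

namespace = {"lookup_weather": lookup_weather, "cities": cities}
exec(code_action, namespace)  # toy only; sandbox real model output

print(len(json_actions), "JSON actions vs 1 code action")
print(namespace["sunny"])  # ['Berlin', 'Rome']
```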

Paper: huggingface.co/papers/2402.0…

Dataset: huggingface.co/datasets/xing…

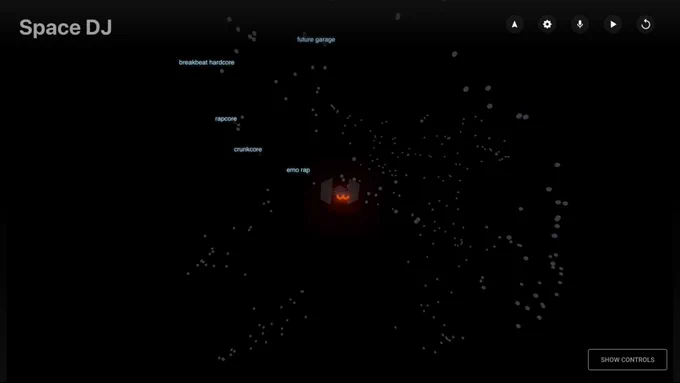

This is so cool! Built with @GoogleAIStudio! Test it here: spacedj-363947264390.us-west…

We built a web app that lets you fly a spaceship through a 3D constellation of music - powered by our Lyria RealTime model. 🎶

Space DJ is an interactive visualization where every star represents a different music genre. As you explore, your path is translated into prompts for the API, creating a continuously evolving soundtrack. ↓

JavaScript guide including how to:

- Create a File Search Store

- Find a Store by Display Name

- Upload Multiple Files Concurrently

- Advanced Upload: Chunking & Metadata

- Run a Standard Generation Query (RAG)

- Find a Specific Document

- Delete a Document

- Update a Document

- Cleanup: Delete the File Search Store

philschmid.de/gemini-file-se…
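The guide itself is in JavaScript. Two of its patterns, looking up a store by display name and bounding concurrent uploads, are sketched below in Python with the SDK stubbed out. `Store`, `find_store_by_display_name`, `upload_all`, and `fake_upload` are hypothetical stand-ins, not the Gemini SDK's API, and the resource names are made up; in real code the store list and the upload call would come from the SDK.

```python
import asyncio
from dataclasses import dataclass
from typing import Awaitable, Callable, Iterable, Optional


@dataclass
class Store:
    """Hypothetical stand-in for a File Search store resource."""
    name: str          # resource name (the real identifier)
    display_name: str  # human-readable label chosen at creation


def find_store_by_display_name(stores: Iterable[Store], display_name: str) -> Optional[Store]:
    """Return the first store with a matching display name, or None."""
    return next((store for store in stores if store.display_name == display_name), None)


async def upload_all(
    paths: list[str],
    upload: Callable[[str], Awaitable[str]],
    max_concurrency: int = 4,
) -> list[str]:
    """Run `upload` for every path, with at most `max_concurrency` in flight."""
    semaphore = asyncio.Semaphore(max_concurrency)

    async def guarded(path: str) -> str:
        async with semaphore:
            return await upload(path)

    # gather() returns results in the same order as `paths`
    return list(await asyncio.gather(*(guarded(path) for path in paths)))


async def fake_upload(path: str) -> str:
    """Hypothetical upload: pretend to send the file, return a document name."""
    await asyncio.sleep(0.01)
    return f"documents/{path}"


# made-up resource names
stores = [Store("fileSearchStores/example-123", "my-docs"),
          Store("fileSearchStores/example-456", "archive")]
print(find_store_by_display_name(stores, "my-docs"))
print(asyncio.run(upload_all(["a.pdf", "b.md", "c.txt"], fake_upload)))
```

The lookup returns the first match and does not assume display names are unique. The semaphore keeps a large batch from opening every upload at once.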

The only Gemini API JavaScript File Search guide you need. philschmid.de/gemini-file-se…

Yesterday, we shipped the File Search Tool, a fully managed RAG system integrated into the Gemini API. Excited to share everything you need to know as a web (JavaScript) developer, covering how to create and find File Search stores, concurrent and advanced upload techniques, and how to update, find, or delete documents.

- Create persistent File Search Stores and find them by display name.

- Upload multiple files concurrently with custom chunking and metadata.

- Run standard RAG generation queries and find specific indexed documents.

- Update, delete, and cleanup documents and File Search Stores.

Link to guide below :)

We've launched the File Search Tool, a fully managed RAG system integrated into the Gemini API that simplifies grounding models with your private data to deliver more accurate, verifiable responses.

- $0.15 per 1M tokens for indexing; storage and query-time embedding generation are free.

- Storage limits: Free tier 1 GB; Tier 1 10 GB; Tier 2 100 GB; Tier 3 1 TB.

- Vector search powered by the Gemini Embedding model.

- Supports PDF, DOCX, TXT, JSON, and programming language files.

- Includes citations specifying exact source documents used.

- Combines results from parallel queries in under 2 seconds.
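Because storage and query-time embeddings are free, a rough cost estimate only needs the indexing price. A back-of-the-envelope sketch using the numbers above; the tier keys, the 500-tokens-per-page figure, and treating 1 TB as 1,000 GB are assumptions of this sketch.

```python
from typing import Optional

INDEXING_USD_PER_MILLION_TOKENS = 0.15  # indexing price from the announcement
# Storage limit per tier in GB (1 TB taken as 1,000 GB here).
STORAGE_LIMIT_GB = {"free": 1, "tier_1": 10, "tier_2": 100, "tier_3": 1_000}


def indexing_cost_usd(tokens: int) -> float:
    """One-time cost of indexing `tokens` tokens."""
    return tokens / 1_000_000 * INDEXING_USD_PER_MILLION_TOKENS


def smallest_tier_for(storage_gb: float) -> Optional[str]:
    """Lowest tier whose storage limit fits `storage_gb`, or None if none does."""
    for tier, limit in STORAGE_LIMIT_GB.items():  # dicts keep insertion order
        if storage_gb <= limit:
            return tier
    return None


# Example: a 2,000-page corpus at an assumed ~500 tokens per page.
tokens = 2_000 * 500
print(f"Indexing {tokens:,} tokens costs about ${indexing_cost_usd(tokens):.2f}")  # $0.15
print(smallest_tier_for(25))  # tier_2
```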

Documentation: ai.google.dev/gemini-api/doc…

Script: github.com/philschmid/gemini…

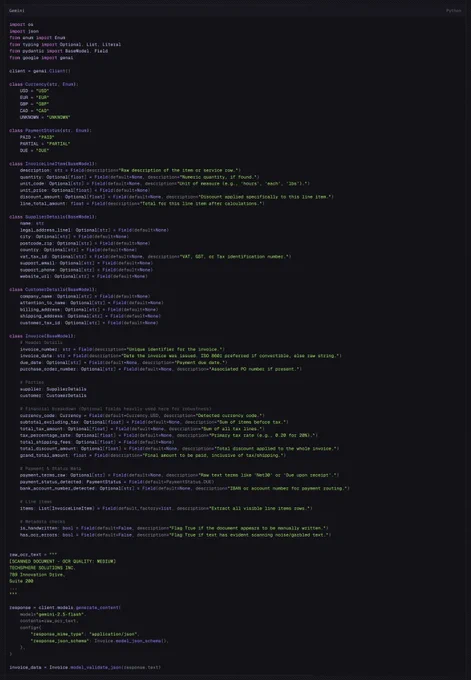

Here is an example of using a deeply nested Pydantic schema with 30+ fields, unions, optionals, and recursive structures with the new Structured Outputs in the Gemini API! 💥

Note: To enable this, use the new `response_json_schema` parameter instead of the old `response_schema`. Documentation and snippet below.

Enhancements to Structured Outputs in the Gemini API expand JSON Schema support for more predictable results, including unions and recursive schemas.

- Supports `anyOf` for unions and `$ref` for recursive schemas.

- Compatible out-of-the-box with Pydantic and Zod libraries.

- Preserves output property ordering to match input schemas.

- Supports `minimum`, `maximum`, `prefixItems`, and more.

- Streams partial JSON chunks for faster processing.

We have multiple examples for Unions or recursive schema as part of our new documentation. 📕
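A small sketch of the kind of schema this unlocks, assuming Pydantic v2: a recursive folder tree whose `children` are a union of files and folders, plus an `Optional` field. `Folder.model_json_schema()` emits `anyOf` for the union and the optional field and a `$ref` into `$defs` for the recursion. That dict is what you would pass as `response_json_schema` (together with `response_mime_type="application/json"`), and the reply can be parsed back with `model_validate_json`. The `File` and `Folder` models are hypothetical and no API call is made here.

```python
import json
from typing import Literal, Optional, Union

from pydantic import BaseModel  # assumes Pydantic v2


class File(BaseModel):
    kind: Literal["file"]
    name: str
    description: Optional[str] = None  # Optional -> anyOf [string, null]


class Folder(BaseModel):
    kind: Literal["folder"]
    name: str
    # Union -> anyOf; the self-reference -> "$ref" into "$defs"
    children: list[Union["Folder", File]] = []


Folder.model_rebuild()  # resolve the "Folder" forward reference explicitly
schema = Folder.model_json_schema()
print(json.dumps(schema, indent=2))

# Parse a reply that follows the schema (hand-written here, no API call).
reply = (
    '{"kind": "folder", "name": "root", "children": ['
    '{"kind": "file", "name": "notes.md"},'
    '{"kind": "folder", "name": "src", "children": []}]}'
)
tree = Folder.model_validate_json(reply)
print([type(child).__name__ for child in tree.children])  # ['File', 'Folder']
```

The `kind` literal acts as a tag, so each child validates against exactly one branch of the union.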

QoL Update! 🔥 You can now prefill prompts via URL parameter (`prompt=`) in @GoogleAIStudio for Gemini!

Append `?prompt=Your Text` to ai(.)studio/prompts/new_chat

Example →

ai(.)studio/prompts/new_chat?prompt=Write a Haiku about flowers

Boom, ready to run! 💥
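When generating these links programmatically, percent-encode the prompt so spaces, `&`, `+`, and `#` survive. A minimal standard-library sketch; the base URL is the `ai(.)studio/prompts/new_chat` link from the post with the dot restored, and `prefill_url` is a hypothetical helper.

```python
from urllib.parse import parse_qs, quote, urlencode, urlparse

BASE_URL = "https://ai.studio/prompts/new_chat"


def prefill_url(prompt: str) -> str:
    """Build a new-chat link with `prompt` percent-encoded (spaces -> %20)."""
    return f"{BASE_URL}?{urlencode({'prompt': prompt}, quote_via=quote)}"


print(prefill_url("Write a Haiku about flowers"))
# https://ai.studio/prompts/new_chat?prompt=Write%20a%20Haiku%20about%20flowers

# Characters that would otherwise break the query string round-trip intact.
tricky = "Is 2+2 < 5 & why? #math"
print(parse_qs(urlparse(prefill_url(tricky)).query)["prompt"][0])  # Is 2+2 < 5 & why? #math
```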

GitHub repository: github.com/philschmid/gemini…

Note: That's currently a side project of mine. Should we make an official one?

Introducing the Gemini Docs MCP Server, a local STDIO server for searching and retrieving Google Gemini API documentation. This should help you build with the latest SDKs and model versions. 🚀

- Run the server directly via uvx without explicit installation.

- Performs full-text search across all Gemini documentation pages locally.

- Passed 114/117 for Python and TypeScript using the latest SDKs and model.

- 3 Tools: search_documentation, get_capability_page, get_current_model.

- Utilizes a local SQLite database with FTS5 for efficient querying.

- Works with @code, @cursor_ai, Gemini CLI … and every other tool that supports MCP.
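The SQLite FTS5 approach is easy to reproduce with Python's built-in `sqlite3` module, assuming your SQLite build includes FTS5 (most do). This is a hypothetical miniature, not the server's code: `build_index` and `search_docs` only mimic what a `search_documentation` tool could do, and the pages are made up.

```python
import sqlite3


def build_index(pages: dict[str, str]) -> sqlite3.Connection:
    """Load (title, body) pairs into an in-memory FTS5 table."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE VIRTUAL TABLE docs USING fts5(title, body)")
    conn.executemany("INSERT INTO docs (title, body) VALUES (?, ?)", pages.items())
    return conn


def search_docs(conn: sqlite3.Connection, query: str, limit: int = 3) -> list[str]:
    """Full-text search; FTS5's `rank` column orders by BM25, best match first."""
    rows = conn.execute(
        "SELECT title FROM docs WHERE docs MATCH ? ORDER BY rank LIMIT ?",
        (query, limit),
    ).fetchall()
    return [title for (title,) in rows]


pages = {
    "File Search": "Upload files to a file search store and ground answers with RAG.",
    "Structured outputs": "Pass a JSON schema to get predictable structured output.",
    "Context caching": "Cache long prompts to reduce cost and latency.",
}
conn = build_index(pages)
print(search_docs(conn, "schema"))  # ['Structured outputs']
```

Running everything locally keeps lookups fast and avoids a network round trip per search.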

Read the full analysis in this week's issue. 👇

philschmid.de/cloud-attentio…

Last week in AI:

- OpenAI restructured into a Public Benefit Corp with a $1.4T compute roadmap.

- Poolside raised $1B at a $12B valuation, and Eric Zelikman secured $1B for a new venture.

- Gemini API prices slashed: 50% off Batch API and 90% off context caching.

- Google AI Pro plans (with Gemini 2.5) are rolling out via Jio in India.

- Moonshot AI shipped Kimi Linear, a hybrid model with 6x decoding throughput.

- Hugging Face released the 200+ page "Smol Training Playbook" for LLMs.

- vLLM introduced "Sleep Mode" for instant, zero-reload model switching.

- New findings suggest switching from BF16 to FP16 reduces RL fine-tuning divergence.

- Epoch AI analysis shows open-weight models now catch up to closed SOTA in ~3.5 months.