AI Developer Experience @GoogleDeepMind | prev: Tech Lead at @huggingface, AWS ML Hero 🤗 Sharing my own views and AI News 🧑🏻💻 philschmid.de

Nürnberg

Joined June 2019


TIL: Claude Code's local sandbox environment is open source.

> native OS sandboxing primitives (sandbox-exec on macOS, bubblewrap on Linux) and proxy-based network filtering. It can be used to sandbox the behaviour of agents, local MCP servers, bash commands and arbitrary processes.

Yesterday, we shipped the File Search Tool, a fully managed RAG system integrated into the Gemini API. Excited to share everything you need to know as a web (JavaScript) developer: creating and finding File Search Stores, concurrent and advanced upload techniques, and how to update, find, or delete documents.

- Create persistent File Search Stores and find them by display name.

- Upload multiple files concurrently with custom chunking and metadata.

- Run standard RAG generation queries and find specific indexed documents.

- Update, delete, and clean up documents and File Search Stores.

Link to guide below :)

We've launched the File Search Tool, a fully managed RAG system integrated into the Gemini API that simplifies grounding models with your private data to deliver more accurate, verifiable responses.

- $0.15 per 1M tokens for indexing; storage and embedding generation at query time are free.

- Storage limits: Free tier 1 GB; Tier 1: 10 GB; Tier 2: 100 GB; Tier 3: 1 TB.

- Vector search powered by the Gemini Embedding model.

- Supports PDF, DOCX, TXT, JSON, and programming language files.

- Includes citations specifying exact source documents used.

- Combines results from parallel queries in under 2 seconds.
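To make the pricing bullets concrete, here is a rough back-of-the-envelope sketch in Python. It only encodes the numbers listed above; the function names, the tier keys, and the choice to treat 1 TB as 1000 GB are assumptions made for illustration, not part of the Gemini API.

```python
# Figures taken from the announcement above; everything else is illustrative.
INDEXING_USD_PER_MILLION_TOKENS = 0.15

# Assumption: 1 TB is treated as 1000 GB for this estimate.
STORAGE_LIMIT_GB = {"free": 1, "tier1": 10, "tier2": 100, "tier3": 1000}


def indexing_cost_usd(tokens: int) -> float:
    """Estimate the one-time indexing cost for a number of tokens."""
    return tokens / 1_000_000 * INDEXING_USD_PER_MILLION_TOKENS


def smallest_tier_for(storage_gb: float):
    """Return the first tier whose storage limit fits, or None if none does."""
    for tier, limit_gb in STORAGE_LIMIT_GB.items():
        if storage_gb <= limit_gb:
            return tier
    return None


if __name__ == "__main__":
    print(f"Indexing 20M tokens: ${indexing_cost_usd(20_000_000):.2f}")
    print(f"Tier needed for 25 GB: {smallest_tier_for(25)}")
```

Since storage and query-time embeddings are listed as free, the indexing charge is the only File Search line item this sketch models.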

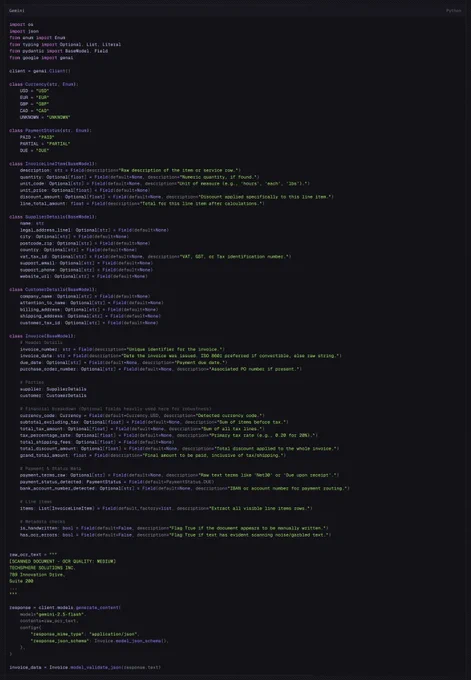

Here is an example of using a deeply nested Pydantic schema with 30+ fields, Unions, Optionals, and recursive structures with the new Structured Outputs in the Gemini API! 💥

Note: To enable this you need to use the new `response_json_schema` parameter instead of the old `response_schema`. Documentation and Snippet below.

Enhancements to Structured Outputs in the Gemini API: expanded JSON Schema support for more predictable results, including unions and recursive schemas.

- Supports `anyOf` for unions and `$ref` for recursive schemas.

- Compatible out-of-the-box with Pydantic and Zod libraries.

- Preserves output property ordering to match input schemas.

- Supports `minimum` and `maximum`, `prefixItems`, and more.

- Streams partial JSON chunks for faster processing.

We have multiple examples for Unions or recursive schema as part of our new documentation. 📕
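For readers who have not written these keywords by hand, here is a small sketch of a JSON Schema that uses `anyOf` for a union and `$ref` for recursion. The `Comment` schema and the `conforms` helper are hypothetical and cover only the handful of keywords used here; a dict like this is the kind of value the `response_json_schema` parameter is described as accepting, but no API call is shown.

```python
# Hypothetical schema: a comment whose replies are themselves comments.
COMMENT_SCHEMA = {
    "$defs": {
        "Comment": {
            "type": "object",
            "properties": {
                "author": {"type": "string"},
                "score": {"type": "integer", "minimum": 0, "maximum": 5},
                # Union: body is either a string or null.
                "body": {"anyOf": [{"type": "string"}, {"type": "null"}]},
                # Recursion: each reply points back to Comment.
                "replies": {"type": "array", "items": {"$ref": "#/$defs/Comment"}},
            },
            "required": ["author", "score", "replies"],
        }
    },
    "$ref": "#/$defs/Comment",
}


def conforms(value, schema, root=None):
    """Tiny checker for the keyword subset above; not a full validator."""
    root = schema if root is None else root
    if "$ref" in schema:
        target = root
        for key in schema["$ref"].split("/")[1:]:  # skip the leading "#"
            target = target[key]
        return conforms(value, target, root)
    if "anyOf" in schema:
        return any(conforms(value, option, root) for option in schema["anyOf"])
    kind = schema.get("type")
    if kind == "null":
        return value is None
    if kind == "string":
        return isinstance(value, str)
    if kind == "integer":
        if isinstance(value, bool) or not isinstance(value, int):
            return False
        return schema.get("minimum", value) <= value <= schema.get("maximum", value)
    if kind == "array":
        return isinstance(value, list) and all(
            conforms(item, schema["items"], root) for item in value
        )
    if kind == "object":
        if not isinstance(value, dict):
            return False
        if any(name not in value for name in schema.get("required", [])):
            return False
        return all(
            conforms(value[name], sub, root)
            for name, sub in schema.get("properties", {}).items()
            if name in value
        )
    return False


if __name__ == "__main__":
    thread = {
        "author": "ada",
        "score": 4,
        "body": None,
        "replies": [{"author": "bob", "score": 5, "replies": []}],
    }
    print(conforms(thread, COMMENT_SCHEMA))
```

With Pydantic, a schema of this shape would normally be generated from model classes rather than written by hand.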

QoL Update! 🔥 You can now prefill prompts via URL parameter (`prompt=`) in @GoogleAIStudio for Gemini!

Append `?prompt=Your Text` to ai(.)studio/prompts/new_chat

Example →

ai(.)studio/prompts/new_chat?prompt=Write a Haiku about flowers

Boom ready to run! 💥
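If you want to generate such links programmatically, a minimal Python sketch could look like this. The `https://` scheme and the de-obfuscated host `ai.studio` are assumptions based on the pattern above, and `build_prefill_url` is a hypothetical helper name. The prompt is percent-encoded so spaces and punctuation survive in the query string.

```python
from urllib.parse import quote, urlencode

# Assumption: the obfuscated "ai(.)studio" above resolves to this HTTPS URL.
BASE_URL = "https://ai.studio/prompts/new_chat"


def build_prefill_url(prompt: str) -> str:
    """Return a link that opens a new chat with the prompt prefilled."""
    # quote_via=quote encodes spaces as %20 instead of "+".
    return f"{BASE_URL}?{urlencode({'prompt': prompt}, quote_via=quote)}"


if __name__ == "__main__":
    print(build_prefill_url("Write a Haiku about flowers"))
```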

Introducing the Gemini Docs MCP Server, a local STDIO server for searching and retrieving Google Gemini API documentation. This should help you build with latest SDKs and model versions. 🚀

- Run the server directly via uvx without explicit installation.

- Performs full-text search across all Gemini documentation pages locally.

- Passed 114/117 for Python and TypeScript using the latest SDKs and model.

- 3 Tools: search_documentation, get_capability_page, get_current_model.

- Utilizes a local SQLite database with FTS5 for efficient querying.

- Works with @code, @cursor_ai, Gemini CLI … and every other tool that supports MCP.
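To illustrate the SQLite + FTS5 idea in general terms, here is a minimal sketch using Python's built-in `sqlite3` module. This is not the server's actual code: the `docs` table, its columns, and the sample pages are made up, and it assumes your SQLite build has FTS5 compiled in (most do).

```python
import sqlite3


def build_index(pages):
    """Create an in-memory FTS5 index from (title, body) pairs."""
    conn = sqlite3.connect(":memory:")
    # Hypothetical table layout; requires SQLite built with FTS5.
    conn.execute("CREATE VIRTUAL TABLE docs USING fts5(title, body)")
    conn.executemany("INSERT INTO docs (title, body) VALUES (?, ?)", pages)
    return conn


def search(conn, query, limit=5):
    """Return titles of matching pages, best match first."""
    rows = conn.execute(
        "SELECT title FROM docs WHERE docs MATCH ? ORDER BY rank LIMIT ?",
        (query, limit),
    )
    return [title for (title,) in rows]


if __name__ == "__main__":
    # Made-up sample pages for demonstration only.
    index = build_index(
        [
            ("Structured output", "Describe the JSON shape you expect back."),
            ("File Search", "Upload files to a store and query them."),
        ]
    )
    print(search(index, "upload"))
```

Ordering by FTS5's built-in `rank` column sorts results by relevance without any extra scoring code.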

Last week in AI:

- OpenAI restructured into a Public Benefit Corp with a $1.4T compute roadmap.

- Poolside raised $1B at a $12B valuation and Eric Zelikman secured $1B for a new venture.

- Gemini API prices slashed: 50% off Batch API and 90% off context caching.

- Google AI Pro plans (with Gemini 2.5) are rolling out via Jio in India.

- Moonshot AI shipped Kimi Linear, a hybrid model with 6x decoding throughput.

- Hugging Face released the 200+ page "Smol Training Playbook" for LLMs.

- vLLM introduced "Sleep Mode" for instant, zero-reload model switching.

- New findings suggest switching from BF16 to FP16 reduces RL fine-tuning divergence.

- Epoch AI analysis shows open-weight models now catch up to closed SOTA in ~3.5 months.