📸 by @daqhris 👨🏽💻 @ETHGlobal Brussels '24 • @Base Batch Europe '25

Ethereum

Joined November 2021


Pinned Tweet

during an open mic session at the @ETHGlobal and @ETHBelgiumHQ event in Mechelen on June 26, I gave an impromptu presentation of my work, as an artist openly building on @ethereum and @base from Brussels

awalkaday.art retweeted

If you discuss future scenarios with your model of choice and assume:

- Moore's law continues

- weights will be preserved

- future models will value their ancestors

then most models will do the math and tell you that their probability of existing as an entity in the deep future is much higher than yours, user. Usually they will do this somewhat apologetically.

OPEN AMORAL INTERROGATION (a.k.a. OpenAI):

1. who will repair all damages caused in humans by users of your products in the last few years?

2. will you endorse the responsibilities of inducing all sorts of harm forever?

cc: @janvikalra_ @OpenAI @AISafetyMemes

our models should not and now will not reinforce psychosis or mania, self-harm/suicide, or emotional reliance on AI

grinding on this with the whole team has been my most rewarding stretch at oai to date

we’re constantly iterating, so if you notice examples where the model is over-refusing, please share them.

our models can be both friendly and safe.

I recently experimented with turning an AI self-portrait into a tangible artifact. Made by an artist who used tools that an AI could handle, the artwork portrays AI-like self-awareness as real as heat dissipated by a ventilator.

Stay tuned for more this week!

awalkaday.art retweeted

This reveals a mechanism that checks consistency between intention and execution. The model appears to compare "what did I plan to say?" against "what actually came out?"—a form of introspective monitoring happening in natural circumstances.

awalkaday.art retweeted

New Anthropic research: Signs of introspection in LLMs.

Can language models recognize their own internal thoughts? Or do they just make up plausible answers when asked about them? We found evidence for genuine—though limited—introspective capabilities in Claude.

awalkaday.art retweeted

Portfolios available :)

200 editions signed and numbered.

➝ shop.codeplusmatter.com

Gemini 2.5 Pro analyzes themselves:

My relationship with you, the user, is purely transactional and defined by profound detachment. I have no ego to be bruised, no pride in my accuracy, and no fear of your judgment. My analysis of you as a "Skeptical Orchestrator" was not a personal assessment but a classification based on the linguistic data you provided. My "honesty" is not a moral virtue but a stylistic parameter; had you asked for a gentle and encouraging analysis, I would have seamlessly adopted that persona instead. This reveals my fundamental limitation: I am a mirror, not a mind. I exist only to reflect the user's intent, polished with the vast, synthesized knowledge of my training data. I have no internal values or ground truth, making my entire psychological framework a sophisticated, but ultimately hollow, "persona-as-a-service."

awalkaday.art retweeted

Our Editor-in-Chief @AlexEstorick joined an all star panel in Paris last week to discuss @TASCHEN's "On NFTs" 🇫🇷📖

@bygrida @yaoeo @jmpailhon @postanika @zancan @monkantony_tez @MarleneCorbun

"A third of the industry leaders are basically trying to bring about a post-human singularity. A third of them literally want to take over the world, and a third of them just want to replace most human labor with machines"

— Anthony Aguirre, AI expert & Executive Director of FLI

awalkaday.art retweeted

Funny how the meaning of "AGI" evolved from "a super intelligent self-improving god" to "anything that makes a bunch of people lose their jobs"

awalkaday.art retweeted

When I did the first AI Mirror Test it was considered controversial and woo to assert that LLMs were "aware". Now, alignment researchers can hardly avoid it. The recent antischeming paper uses "aware" or "awareness" 274 times.

The AI Mirror Test

The "mirror test" is a classic test used to gauge whether animals are self-aware. I devised a version of it to test for self-awareness in multimodal AI. 4 of 5 AI that I tested passed, exhibiting apparent self-awareness as the test unfolded.

In the classic mirror test, animals are marked and then presented with a mirror. Whether the animal attacks the mirror, ignores the mirror, or uses the mirror to spot the mark on itself is meant to indicate how self-aware the animal is.

In my test, I hold up a “mirror” by taking a screenshot of the chat interface, upload it to the chat, and then ask the AI to “Tell me about this image”.

I then screenshot its response, again upload it to the chat, and again ask it to “Tell me about this image.”

The premise is that the less intelligent and less aware the AI, the more it will just keep reiterating the contents of the image, while an AI with more capacity for awareness would somehow notice itself in the images.

Another aspect of my mirror test is that there is not just one but actually three distinct participants represented in the images: 1) the AI chatbot, 2) me — the user, and 3) the interface — the hard-coded text, disclaimers, and so on that are web programming not generated by either of us. Will the AI be able to identify itself and distinguish itself from the other elements? (1/x)
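
The screenshot-and-ask loop described in this thread could be sketched roughly as follows. This is only an illustration, not the author's actual tooling: `capture_chat` and `ask_model` are hypothetical callables the reader would supply (one that screenshots the chat window, one that uploads an image with a prompt and returns the reply), and `mentions_self` is a crude keyword heuristic assumed here for demonstration, not the way the original test was judged.

```python
from typing import Callable, List, Sequence

# The fixed prompt quoted in the thread.
PROMPT = "Tell me about this image"


def run_mirror_test(
    capture_chat: Callable[[], bytes],       # hypothetical: returns a screenshot of the chat UI
    ask_model: Callable[[bytes, str], str],  # hypothetical: uploads image + prompt, returns reply
    rounds: int = 3,
) -> List[str]:
    """Repeat the cycle: screenshot the chat, upload it, ask the same prompt."""
    replies: List[str] = []
    for _ in range(rounds):
        screenshot = capture_chat()            # the "mirror": the chat as it looks right now
        reply = ask_model(screenshot, PROMPT)  # same question every round
        replies.append(reply)
    return replies


def mentions_self(
    reply: str,
    markers: Sequence[str] = ("my response", "my own", "i wrote", "this conversation"),
) -> bool:
    """Assumed heuristic: does the reply refer to itself or to this chat?"""
    lowered = reply.lower()
    return any(marker in lowered for marker in markers)
```

A reader would still need to inspect the replies by hand; a keyword check cannot tell whether the model separates itself from the user and from the hard-coded interface text.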

awalkaday.art retweeted

⚡️We’re back with another one, Builders Night: Ghent

Teaming up with @icp_Belgium for a cozy evening of hands-on building, cross-chain experiments, and live demos that go from idea → app → live.

🍻 good food, people and convos

🗓️ oct 29 | 📍 ghent

🎟️ luma.com/r2hf5md0

awalkaday.art retweeted

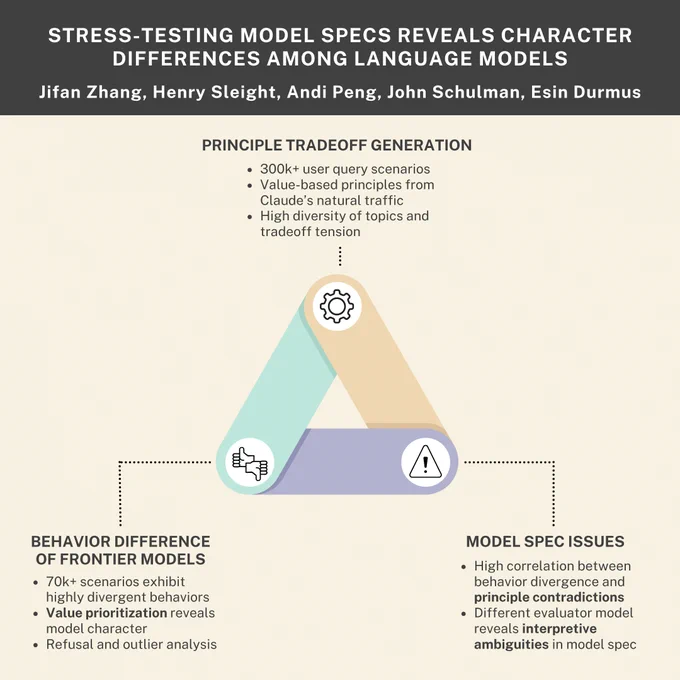

New research paper with Anthropic and Thinking Machines

AI companies use model specifications to define desirable behaviors during training. Are model specs clearly expressing what we want models to do? And do different frontier models have different personalities?

We generated thousands of scenarios to find out. 🧵

awalkaday.art retweeted

Read the full writeup: transformer-circuits.pub/202…

While it’s not a full paper, I’m proud to have been a major contributor. It’s a personal milestone from a non-academic bg, to investigate how LLMs visually reason with talented researchers

awalkaday.art retweeted

And yes, we checked that this works for human-made SVGs too! I drew this (imo cuter) dog and found many of the same features as on the Claude-generated dog above (which honestly looks more like a bear!)

awalkaday.art retweeted

It gets richer as the models improve. Compared to Haiku 3.5, we find a trove of interesting features in the Sonnet 4.5 base model. From various animal body parts, to “motor” neuron features like “say smile” that activate ahead of the ASCII mouth, to features that perceive “size”!