Joined May 2022

- Tweets 650

- Following 235

- Followers 31

- Likes 753

kiệt trần tuấn retweeted

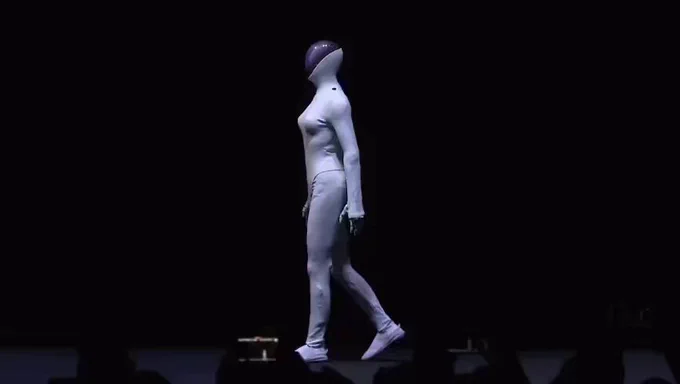

wow.. the uncanny valley of motion is closing. 🤯

The humanoid robot by China's Xpeng @XPengMotors

From 5 meters your brain says “person”, then remembers it is a robot.

Weight shifts, small corrections, no stiffness.

A walking beauty 🫡

🦿Xpeng showed a humanoid robot called IRON whose movement looked so human that the team literally cut it open on stage to prove it is a machine.

IRON uses a bionic body with a flexible spine, synthetic muscles, and soft skin so joints and torso can twist smoothly like a person.

The system has 82 degrees of freedom in total with 22 in each hand for fine finger control.

Compute runs on 3 custom AI chips rated at 2,250 TOPS (Tera Operations Per Second), which is far above typical laptop neural accelerators, so it can handle vision and motion planning on the robot.
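A quick back-of-the-envelope check on the compute figure. The post gives only the total, so the even split across the three chips is an assumption. The 40 TOPS laptop baseline is the NPU minimum Microsoft sets for Copilot+ PCs, used here as a stand-in for a "typical laptop neural accelerator":

```python
# Back-of-the-envelope comparison of the quoted compute budget.
TOTAL_TOPS = 2250        # total quoted for IRON's three chips
NUM_CHIPS = 3
LAPTOP_NPU_TOPS = 40     # assumption: Copilot+ PC NPU minimum, used as a laptop baseline


def per_chip_tops(total: float, chips: int) -> float:
    """Per-chip throughput, assuming the total is split evenly (not stated in the post)."""
    return total / chips


def ratio_to_baseline(total: float, baseline: float) -> float:
    """How many baseline accelerators the total budget corresponds to."""
    return total / baseline


if __name__ == "__main__":
    print(f"per chip: {per_chip_tops(TOTAL_TOPS, NUM_CHIPS):.0f} TOPS")  # 750 TOPS
    print(f"vs. laptop NPU: about {ratio_to_baseline(TOTAL_TOPS, LAPTOP_NPU_TOPS):.0f}x")
```

Under these assumptions the robot carries roughly 56 laptop NPUs' worth of compute.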

The AI stack focuses on turning camera input directly into body movement without routing through text, which reduces lag and makes the gait look natural.
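To make "no routing through text" concrete, here is a toy sketch of a direct policy: a feature vector extracted from camera frames goes straight through a learned map to one target per joint, with no intermediate language step. The linear map, the random weights, and the feature size are hypothetical placeholders. Only the 82-joint output size comes from the post, and this is not Xpeng's architecture:

```python
import random
from typing import List

NUM_DOF = 82        # degrees of freedom quoted for IRON
FEATURE_DIM = 16    # hypothetical size of the visual feature vector


class DirectPolicy:
    """Toy vision-to-action policy: features -> joint targets, no text in between.

    Weights are random placeholders; a real system would learn them.
    """

    def __init__(self, feature_dim: int, num_dof: int, seed: int = 0) -> None:
        rng = random.Random(seed)
        self.weights = [
            [rng.uniform(-0.1, 0.1) for _ in range(feature_dim)] for _ in range(num_dof)
        ]
        self.bias = [0.0] * num_dof

    def act(self, features: List[float]) -> List[float]:
        if len(features) != len(self.weights[0]):
            raise ValueError("feature vector has the wrong size")
        # One dot product per joint: a single forward pass per control tick.
        return [
            sum(w * x for w, x in zip(row, features)) + b
            for row, b in zip(self.weights, self.bias)
        ]


if __name__ == "__main__":
    policy = DirectPolicy(FEATURE_DIM, NUM_DOF)
    targets = policy.act([0.5] * FEATURE_DIM)
    print(len(targets))  # 82 joint targets
```

The design point, as the post argues, is latency: one forward pass per control tick instead of generating and then parsing text.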

Xpeng staged the cut-open demo at AI Day in Guangzhou this week, addressing rumors that a performer was inside by exposing internal actuators, wiring, and cooling.

Company materials also mention a large physical-world model and a multi-brain control setup for dialogue, perception, and locomotion, hinting at a path from stage demos to service work.

Production is targeted for 2026, so near-term tasks will be limited, but the hardware shows a serious step toward human-scale manipulation.

kiệt trần tuấn retweeted

Singapore’s scientists have developed a breakthrough so simple, yet so powerful: a paint that cools buildings without using a single watt of power.

This “smart paint” reflects up to 98% of solar heat, keeping surfaces up to 5°C cooler than ambient air. Made from ceramic nanoparticles and reflective polymers, it forms a passive cooling layer that can last for decades — cutting air-conditioning costs dramatically.
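The 98% figure translates directly into how much sunlight a surface still absorbs. A minimal sketch, assuming the standard 1000 W/m² reference irradiance for full sun and, for comparison, a hypothetical conventional white paint that reflects 80%:

```python
PEAK_IRRADIANCE_W_M2 = 1000.0   # standard reference irradiance for full sun


def absorbed_flux(reflectance: float, irradiance: float = PEAK_IRRADIANCE_W_M2) -> float:
    """Solar power absorbed per square metre for a given solar reflectance (0..1)."""
    if not 0.0 <= reflectance <= 1.0:
        raise ValueError("reflectance must be between 0 and 1")
    return (1.0 - reflectance) * irradiance


if __name__ == "__main__":
    smart = absorbed_flux(0.98)      # reflectance quoted in the post
    ordinary = absorbed_flux(0.80)   # hypothetical conventional white paint
    print(f"smart paint: {smart:.0f} W/m^2, ordinary paint: {ordinary:.0f} W/m^2")
```

Under those assumptions the paint absorbs about 20 W/m² versus 200 W/m², a tenth of the heat load.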

In tropical cities, where energy use surges from heat, this could mean millions of tons of carbon emissions avoided every year. The paint works on concrete, metal, and glass, and it’s already being tested on skyscrapers, buses, and even aircraft hangars.

kiệt trần tuấn retweeted

Nano Banana 2 by Google generated this page.

But it didn't just generate the web page in the browser. The entire web browser is generated too, and the desktop as well. It's all just one generated image!

Impressed?

kiệt trần tuấn retweeted

glycine makes you 2x more beautiful…

got pimples, dry skin or redness?

you might just be missing this

glycine:

– reduces inflammation & redness

– improves skin hydration & elasticity

– builds collagen (your skin’s building block)

studies show up to 200% more collagen production with consistent glycine intake

imagine spending a FORTUNE on skincare

when you could pay a fraction for real results.

kiệt trần tuấn retweeted

This is pretty amazing

Scientists May Have Found a Way to Rejuvenate the Immune System. A new pill is reversing immune aging in humans in just a few weeks. 👀

Swiss biotech Amazentis SA just showed exciting clinical results for its longevity compound Mitopure, a purified form of urolithin A, the so-called "youth molecule".

In a 4-week human trial, participants taking Mitopure showed rejuvenated immune cells with a younger metabolic signature and stronger cellular activity.

The supplement was safe, well tolerated, and previously shown to boost muscle strength in older adults.

Scientists believe it may help counteract immune aging by promoting mitochondrial renewal, hinting at one of the most promising anti-aging breakthroughs yet.

A few days ago it was skin and heart; now it's the immune system.

We will be able to rejuvenate all parts of the human body by the 2030s.

kiệt trần tuấn retweeted

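Don't think of this as an AI that merely “counts.”

It uses YOLOv12 to track the movement of pills in real time, counting them accurately while keeping each pill's tracking ID.

A system that beats human counting accuracy is already running. Code in the 🧵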
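The thread's code is not reproduced here. As a hedged sketch of the idea described (counting by persistent track ID rather than by per-frame detections), the function below assumes a detector such as YOLOv12 plus a multi-object tracker already yields `(frame, track_id, x, y)` centroids. It counts each ID once when it crosses a hypothetical horizontal counting line. The input format and line position are assumptions, not the author's code:

```python
from typing import Dict, Iterable, Set, Tuple

# (frame_index, track_id, x, y): centroid of one tracked pill in one frame.
Detection = Tuple[int, int, float, float]


def count_line_crossings(detections: Iterable[Detection], line_y: float) -> int:
    """Count distinct track IDs whose centroid moves from above line_y (smaller y)
    to at/below it.

    Because the count is keyed by track ID, a pill seen in many frames is counted once.
    """
    last_y: Dict[int, float] = {}
    counted: Set[int] = set()
    for _, track_id, _, y in sorted(detections):  # process in frame order
        prev = last_y.get(track_id)
        if prev is not None and prev < line_y <= y and track_id not in counted:
            counted.add(track_id)
        last_y[track_id] = y
    return len(counted)


if __name__ == "__main__":
    demo = [
        (0, 1, 10.0, 40.0), (1, 1, 10.0, 55.0), (2, 1, 10.0, 70.0),  # ID 1 crosses y=50
        (0, 2, 30.0, 45.0), (1, 2, 30.0, 48.0),                      # ID 2 never crosses
        (1, 3, 50.0, 20.0), (2, 3, 50.0, 60.0),                      # ID 3 crosses
    ]
    print(count_line_crossings(demo, line_y=50.0))  # 2
```

Keying on the track ID is what prevents double counting when a pill jitters back and forth across the line.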

Grok 4 Fast has a massive 2M-token context window that unlocks enterprise-scale reasoning

It easily remembers ultra-long chats, huge codebases, or hundreds of pages in a single go
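For a rough sense of scale, 2M tokens can be converted to pages. The ratios below are rules of thumb, not xAI figures: roughly 0.75 English words per token (a common estimate for GPT-style tokenizers) and a hypothetical 500 words per page:

```python
CONTEXT_TOKENS = 2_000_000
WORDS_PER_TOKEN = 0.75   # rule of thumb for English text; varies by tokenizer
WORDS_PER_PAGE = 500     # assumption: a dense single-spaced page


def tokens_to_pages(tokens: int, words_per_token: float = WORDS_PER_TOKEN,
                    words_per_page: float = WORDS_PER_PAGE) -> float:
    """Approximate number of pages that fit in a given token budget."""
    return tokens * words_per_token / words_per_page


if __name__ == "__main__":
    print(f"~{tokens_to_pages(CONTEXT_TOKENS):,.0f} pages")  # ~3,000 pages
```

Tokenizer and page density shift the exact result, but the order of magnitude is what matters here.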

The best part? xAI just updated it with massive performance gains:

Reasoning mode: 77.5% ➝ 94.1%

Non-reasoning: 77.9% ➝ 97.9%

Grok 4 Fast delivers near-flagship results with up to 98% lower cost

kiệt trần tuấn retweeted

This looks fantastic, MicroFactory DevKit for Robots.

The big idea is to standardize the physical workcell, then scale imitation learning across identical copies.

A single, tightly controlled, exactly reproduced robot hardware cell removes the environmental randomness that usually breaks imitation learning.

With the same fixtures, sensors, lighting, and calibration, demonstrations gathered in 1 cell can be reused, and the trained policy transfers to every cloned cell with little extra data.
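One way to picture "exactly reproduced" cells in code is to describe each cell's fixtures, sensors, lighting, and calibration as an immutable config. Demonstrations are then pooled only from cells whose config matches the reference cell. The field names, values, and the `pool_demonstrations` helper are hypothetical. Nothing here is the MicroFactory DevKit API:

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class CellConfig:
    """Hypothetical description of one physical workcell."""
    fixture_rev: str
    camera_model: str
    lighting_lux: int
    calibration_id: str


def pool_demonstrations(reference: CellConfig,
                        cells: Dict[str, CellConfig],
                        demos: Dict[str, List[str]]) -> List[str]:
    """Merge demos only from cells identical to the reference, so they share one domain."""
    pooled: List[str] = []
    for cell_id, config in cells.items():
        if config == reference:  # dataclasses compare field by field
            pooled.extend(demos.get(cell_id, []))
    return pooled


if __name__ == "__main__":
    ref = CellConfig("A2", "cam-x", 800, "cal-01")
    cells = {
        "cell-1": ref,
        "cell-2": CellConfig("A2", "cam-x", 800, "cal-01"),  # exact clone
        "cell-3": CellConfig("A2", "cam-x", 450, "cal-01"),  # dimmer lighting: excluded
    }
    demos = {"cell-1": ["d1", "d2"], "cell-2": ["d3"], "cell-3": ["d4"]}
    print(pool_demonstrations(ref, cells, demos))  # ['d1', 'd2', 'd3']
```

Comparing the whole config is deliberately strict. Drift in any single field keeps that cell's data out of the shared pool, which mirrors the post's point that environmental variation is what breaks imitation learning.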

This sharply reduces domain shift and makes data and policies reusable across sites.

Great to see GLM-4.6 powering Cerebras Code.

This is exactly why we open weights: so teams can combine their own infra and ideas with GLM capabilities, and bring more choices to developers worldwide.

Huge welcome to all partners building on GLM. Let’s grow the ecosystem together.

kiệt trần tuấn retweeted

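Another “speed barrier” in video-generation AI has fallen.

Bytedance’s new model, “InfinityStar,” is an 8B-parameter spacetime autoregressive model that handles space and time in a unified way. It generates a 5-second 720p video in just a few seconds.

It sets a new SOTA with a VBench score of 83.74 and runs about 10× faster than DiT. Link in the 🧵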

kiệt trần tuấn retweeted

🚨 Everyone’s obsessed with “new AI regulation.” Oxford just dropped a bomb: we don’t need new rules; we already have them.

Here’s the uncomfortable truth: the world doesn’t need another 500-page “AI safety” manifesto. We just need to use the standards that already keep planes from falling, nukes from melting, and banks from collapsing.

Oxford’s latest study says AI isn’t some alien life form that needs an entirely new playbook. It’s just another high-risk technology, and we already have global systems for that. ISO 31000. ISO/IEC 23894. The same frameworks that manage billion-dollar risks in energy, aviation, and finance.

Translation: instead of reinventing regulation, we should plug AI into the safety infrastructure that already works.

Because right now, Big AI labs like OpenAI, Anthropic, and DeepMind are running their own private “safety frameworks.” They decide when a model is too powerful. They decide when to pause. They decide when to release.

That’s like Boeing regulating itself after building the 737 MAX.

Oxford’s point? Don’t burn the system down — evolve it. Merge AI’s fast-moving internal guardrails with the slow, proven machinery of global safety standards. Make AI auditable, comparable, and accountable like every other life-critical industry.

When an AI model suddenly shows new reasoning abilities or multi-domain behavior, that should automatically trigger a formal review, not a PR tweet.
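The "automatic trigger" idea can be expressed as a simple release gate. In this hypothetical sketch, which is not taken from the Oxford study or from ISO 31000 or ISO/IEC 23894, a new model's capability evaluations are compared with the previous release. Any jump above a threshold, or a capability measured for the first time, flags a formal review. The eval names and the 10-point threshold are invented for illustration:

```python
from typing import Dict, List

JUMP_THRESHOLD = 10.0  # hypothetical: percentage-point gain that forces a review


def review_triggers(previous: Dict[str, float], current: Dict[str, float],
                    threshold: float = JUMP_THRESHOLD) -> List[str]:
    """Return the evaluations that should trigger a formal review before release."""
    reasons: List[str] = []
    for name, score in sorted(current.items()):
        if name not in previous:
            reasons.append(f"{name}: new capability measured ({score:.1f})")
        elif score - previous[name] > threshold:
            reasons.append(f"{name}: +{score - previous[name]:.1f} points")
    return reasons


if __name__ == "__main__":
    prev = {"math_reasoning": 55.0, "code_agent": 40.0}
    curr = {"math_reasoning": 71.0, "code_agent": 42.0, "bio_protocols": 30.0}
    for reason in review_triggers(prev, curr):
        print("REVIEW:", reason)
```

The gate is mechanical on purpose: the decision to review does not depend on anyone choosing to announce the change.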

That’s how you scale trust. That’s how you make AI safety operational, not theoretical.

Oxford’s stance is clear:

AI doesn’t need prophets. It needs process.

🔥 So here’s the real question: do you think governments and AI labs can actually build a shared language of risk, or will they keep talking past each other until it’s too late?

kiệt trần tuấn retweeted

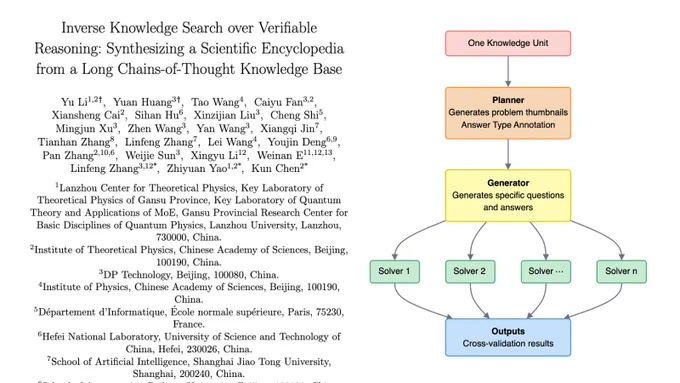

🚨 This might be the most mind-bending AI breakthrough yet.

China just built an AI that doesn’t just explain how the universe works; it actually understands why.

Most of science is a black box of conclusions. We know the “what,” but the logic that connects everything, the “why,” is missing. Researchers call it the dark matter of knowledge: the invisible reasoning web linking every idea in existence.

Their solution is unreal.

A Socrates AI Agent that generates 3 million first-principles questions across 200 fields, each one answered by multiple LLMs and cross-checked for logical accuracy.
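The "answered by multiple LLMs and cross-checked" step resembles a consensus filter. A minimal sketch, assuming each question already has answers from several models as short strings: an answer is kept only if a qualified majority agrees after normalization. The 2/3 agreement threshold and the normalization rule are assumptions, not details from the paper:

```python
from collections import Counter
from typing import List, Optional


def normalize(answer: str) -> str:
    """Crude normalization so trivially different phrasings compare equal."""
    return " ".join(answer.lower().split()).rstrip(".")


def consensus_answer(answers: List[str], min_agreement: float = 2 / 3) -> Optional[str]:
    """Return the majority answer if enough models agree, otherwise None (discard)."""
    if not answers:
        return None
    counts = Counter(normalize(a) for a in answers)
    best, votes = counts.most_common(1)[0]
    return best if votes / len(answers) >= min_agreement else None


if __name__ == "__main__":
    # Three hypothetical model answers to "What is the SI unit of force?"
    print(consensus_answer(["Newton", "newton.", "Joule"]))  # newton
```

Questions where the models disagree are dropped rather than guessed, trading coverage for fewer hallucinated entries.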

What it creates is a Long Chain-of-Thought (LCoT) knowledge base—where every concept in science can be traced back to its fundamental truths.

Then they went further.

They built a Brainstorm Search Engine for inverse knowledge discovery.

Instead of asking “What is an Instanton?”, you can explore how it emerges through reasoning paths that connect quantum tunneling, Hawking radiation, and 4D manifolds.
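Inverse discovery over a reasoning graph boils down to path search: start from one concept and follow the links that connect it to others. The sketch below runs a breadth-first search over a tiny, hand-made concept graph. Its nodes are the terms from the post, but the edges are illustrative placeholders, not SciencePedia data:

```python
from collections import deque
from typing import Dict, List, Optional

# Toy, hand-made concept graph (illustrative only; not SciencePedia data).
GRAPH: Dict[str, List[str]] = {
    "instanton": ["quantum tunneling", "4D manifolds"],
    "quantum tunneling": ["instanton", "Hawking radiation"],
    "Hawking radiation": ["quantum tunneling"],
    "4D manifolds": ["instanton"],
}


def reasoning_path(graph: Dict[str, List[str]], start: str, goal: str) -> Optional[List[str]]:
    """Shortest chain of linked concepts from start to goal, via breadth-first search."""
    parents: Dict[str, Optional[str]] = {start: None}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if node == goal:
            # Walk parent links back to the start, then reverse.
            path: List[str] = []
            cur: Optional[str] = node
            while cur is not None:
                path.append(cur)
                cur = parents[cur]
            return path[::-1]
        for nxt in graph.get(node, []):
            if nxt not in parents:
                parents[nxt] = node
                queue.append(nxt)
    return None


if __name__ == "__main__":
    print(reasoning_path(GRAPH, "instanton", "Hawking radiation"))
    # ['instanton', 'quantum tunneling', 'Hawking radiation']
```

BFS returns the shortest chain. A system built for exploration would more likely enumerate several paths and rank them.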

They call it:

“The dark matter of knowledge, finally made visible.”

The project SciencePedia already maps 200K verified scientific entries across physics, math, chemistry, and biology.

It shows 50% fewer hallucinations, denser reasoning than GPT-4, and traceable logic behind every answer.

This isn’t just better search.

It’s the first AI that exposes the hidden logic of science itself.

Comment “Send” and I’ll DM you the paper.

Woah, this is cool! Thank you for building in the open with the community!

🚀 Introducing SGLang Diffusion — bringing SGLang’s high-performance serving to diffusion models.

⚡️ Up to 5.9× faster inference

🧩 Supports major open-source models: Wan, Hunyuan, Qwen-Image, Qwen-Image-Edit, Flux

🧰 Easy to use via OpenAI-compatible API, CLI & Python API
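Since the API is described as OpenAI-compatible, a client request would presumably resemble a call to OpenAI's image-generation endpoint. The sketch below only builds that request with the standard library and does not send it. The `/v1/images/generations` path, the `localhost:30000` address (SGLang's usual default port), and the model identifier are all assumptions to check against the SGLang Diffusion docs:

```python
import json
import urllib.request

BASE_URL = "http://localhost:30000"      # assumption: local server on SGLang's default port
ENDPOINT = "/v1/images/generations"      # assumption: OpenAI-style image endpoint
MODEL = "Qwen/Qwen-Image"                # hypothetical model identifier for the server


def build_image_request(prompt: str, size: str = "1024x1024") -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style image generation request."""
    payload = {"model": MODEL, "prompt": prompt, "size": size, "n": 1}
    return urllib.request.Request(
        BASE_URL + ENDPOINT,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    req = build_image_request("a watercolor fox in the snow")
    print(req.get_method(), req.full_url)
    # Against a running server, send it with: urllib.request.urlopen(req)
```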

Built with FastVideo to power the full diffusion ecosystem, and special thanks to @NVIDIAAIDev and @VoltagePark for their compute support!

⬇️Read more in the thread:

kiệt trần tuấn retweeted

🚨BREAKING: Elon Musk has dropped a bombshell, publicly calling for the arrest of politicians involved in a massive scheme to launder taxpayer money through non-governmental organizations. He calls for Attorney General Pam Bondi to "put the hammer down" on the culprits.

Do you support this?

If you support this, give me a THUMBS-UP👍!

kiệt trần tuấn retweeted

We improved our ARC-AGI-2 score to 29.72%, which is the new SOTA for ARC-AGI-2.

This score is not legit for the ARC Prize competition because we got it after the deadline. It is legit for the ARC-AGI leaderboard because the test data is the same.

Our solution costs $0.21 per task, while the previous SOTA of 29.40% cost $30 per task.
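Taking the per-task costs as $0.21 and $30, the cost gap is easy to quantify:

```python
def cost_ratio(previous_cost: float, new_cost: float) -> float:
    """How many times cheaper the new solution is per task."""
    if new_cost <= 0:
        raise ValueError("new_cost must be positive")
    return previous_cost / new_cost


if __name__ == "__main__":
    # Per-task costs quoted in the post: $30 (previous SOTA) vs. $0.21 (this solution).
    print(f"{cost_ratio(30.0, 0.21):.0f}x cheaper")  # 143x cheaper
```

That is roughly two orders of magnitude cheaper for a slightly higher score (29.72% vs. 29.40%).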

We'll disclose our method on Dec 5th, when the ARC Prize competition results are unveiled.

I took first place in the @arcprize @kaggle competition with my colleague Ivan Sorokin. These are preliminary results only. Final results will be available on Dec 5th. We may drop from first place, since our code will be evaluated on a different set of challenges.

Nevertheless, this is a somewhat unexpected result for us. We climbed fast during the last week, but topping the leaderboard was just a wild dream.

We will open source our solution and share what we did. Stay tuned.

Note that our score can be compared to the scores on the ARC-AGI leaderboard. We crush SOTA LLMs: the best one, GPT-5 Pro, scores 18.3% vs. our 27.7%. And we get there at a tiny fraction of the cost, because we run within Kaggle's compute constraints (12 hours with 4 L4 GPUs).
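The Kaggle limits quoted above amount to a fixed compute budget, and the gap to GPT-5 Pro can be expressed in absolute and relative terms. Only the numbers from the post are used:

```python
from typing import Tuple

HOURS = 12
GPUS = 4  # NVIDIA L4s, per the post


def gpu_hours(hours: float, gpus: int) -> float:
    """Total accelerator time available for the whole submission."""
    return hours * gpus


def score_gap(ours: float, theirs: float) -> Tuple[float, float]:
    """Absolute gap in percentage points and relative improvement factor."""
    return ours - theirs, ours / theirs


if __name__ == "__main__":
    print(f"budget: {gpu_hours(HOURS, GPUS):.0f} L4 GPU-hours")  # 48
    points, factor = score_gap(27.7, 18.3)
    print(f"+{points:.1f} points, {factor:.2f}x GPT-5 Pro's score")
```

In other words, 48 L4 GPU-hours for the entire evaluation run, yielding 9.4 more points (about 1.5x) than GPT-5 Pro.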

kaggle.com/competitions/arc-…

kiệt trần tuấn retweeted

the mysterious model on OpenRouter named "polaris alpha" is likely from OpenAI

they're following a similar pattern to the GPT-4.1 pre-release called "quasar alpha"

it's probably a non-reasoning model with a large context window

but more leaks include:

GPT-5.1 reasoning

GPT-5.1 pro

kiệt trần tuấn retweeted

Meta made an acquisition offer to a researcher for $1 billion – Google offered almost $3 billion.

Google’s $2.7 billion acquisition of CharacterAI brought back AI pioneer Noam Shazeer - but his outspoken posts on gender, Gaza, and politics have ignited internal strife.

Shazeer’s comments have repeatedly been deleted by moderators, drawing rebukes from top leaders like Jeff Dean and fueling debates over free speech, workplace inclusion, and Google’s evolving culture, says The Information. As the architect of major Gemini model breakthroughs, Shazeer’s brilliance now clashes with Google’s push to limit political and divisive speech at work.