Data Scientist

East Java, Indonesia

Joined March 2018

- Tweets 48,325

- Following 1,083

- Followers 419

- Likes 623

Salman King retweeted

Here are some slides I made for a short tutorial on Non-Euclidean gradient descent and these variants: docs.google.com/presentation…

Salman King retweeted

When you also throw truncation in the mix, that gives a lot of possible variants of Muon. We tried out many, here are some of the winners (including Scion)

Salman King retweeted

So much of biomedical research rests on tacit knowledge—unwritten and invisible to LLMs—causing agents to underperform.

The Biomni Open Know-How Catalogue bridges that gap: a curated library of human expertise that Biomni agents use intelligently on the fly, with dramatic gains.

Build together: biomni.stanford.edu/blog/bio…

Salman King retweeted

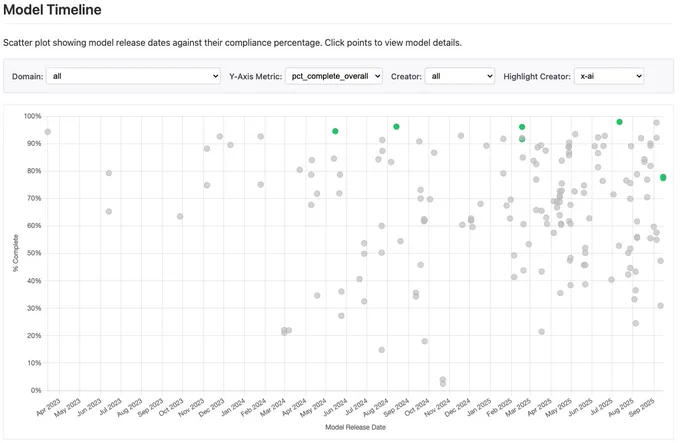

Someone from xAI reached out and asked me to retest grok-4-fast, because they've improved the injected system prompts. Huge improvement!

grok-4-fast-reasoning: 77.5% -> 94.1%

grok-4-fast-non-reasoning: 77.9% -> 97.9%

I really appreciate that xAI takes this topic seriously.

Salman King retweeted

💥The future of protein AI isn't 3D, it's 4D💥

PTraj-Diff = SE(3)-diffusion (geometry) + BERT encoder (time)

A custom TPA (90% mem reduction) makes it feasible.

The result: high-quality, long-range trajectories seeded directly from static AF3 folds

Salman King retweeted

If you are working on real-to-sim, simulating digital twins, and policy evaluation, you should check out our fully open-sourced code base.

Lots of handy tools for building Gaussian Splatting simulators and interacting with them!

github.com/kywind/real2sim-e…

Will continue to be maintained and expanded.

Salman King retweeted

verifiers v0.1.7 is released 🚀

this one's all about making RL training and experimentation waaaay easier:

- single-command installation for prime-rl

- single-command training w/ unified configs

- overhauled vf.RLTrainer for hacking on new algorithms

quick demo + links below :)

Salman King retweeted

Finished "Neuroscience" lecture series 🥳

started on "Manifold learning", same teacher

aka "dimensionality reduction"

I always thought in ML when we say "this data is 1000s of dimensions" etc, that this is the true dimensionality of its representation, and dim reduction is an approximation so our eyes can perceive it at some level

But actually, the true representation of 1000-dimensional data can be 50 dimensions perhaps, or even 3 or 2

so those 1000s of dims are just the space that contains it, but that doesn't mean that is the most meaningful representation

this is a quick introductory course and I highly recommend it. only 7 short lectures

She really teaches well and the lecture talks about methods as well like t-SNE, UMAP, PCA etc

I will put a link in comment
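A minimal sketch of the point above, not taken from the course: the data below is stored in 1000 dimensions but is generated from only 3 hidden factors, and counting its independent directions recovers that 3. This covers only the linear case, which is the setting PCA works in; curved manifolds are what methods like t-SNE and UMAP are for. All names and sizes here (`latent_dim`, `ambient_dim`, `numerical_rank`) are made up for illustration.

```python
import random


def numerical_rank(rows, tol=1e-9):
    """Count linearly independent directions using Gaussian elimination
    with partial pivoting. Entries smaller than tol are treated as zero."""
    m = [list(r) for r in rows]
    n_rows, n_cols = len(m), len(m[0])
    rank = 0
    for col in range(n_cols):
        if rank == n_rows:
            break
        # Pick the row with the largest entry in this column as the pivot.
        pivot = max(range(rank, n_rows), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < tol:
            continue  # nothing new in this column
        m[rank], m[pivot] = m[pivot], m[rank]
        for r in range(rank + 1, n_rows):
            factor = m[r][col] / m[rank][col]
            for c in range(col, n_cols):
                m[r][c] -= factor * m[rank][c]
        rank += 1
    return rank


random.seed(0)
latent_dim, ambient_dim, n_points = 3, 1000, 20  # illustrative sizes

# Fixed linear map from the 3 hidden factors into 1000-dimensional space.
mixing = [[random.gauss(0, 1) for _ in range(ambient_dim)]
          for _ in range(latent_dim)]

points = []
for _ in range(n_points):
    z = [random.gauss(0, 1) for _ in range(latent_dim)]  # hidden factors
    points.append([sum(z[k] * mixing[k][j] for k in range(latent_dim))
                   for j in range(ambient_dim)])

print("stored dimensions:", len(points[0]))
print("independent directions:", numerical_rank(points))
```

Each point has 1000 coordinates, yet the rank comes out as the number of hidden factors, so 3 numbers per point would describe this data exactly.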

Joy of life-long learning day 77...

Almost done with Neuroscience. But will pick up another course on it once finished

Intro to Neuroscience: 95% - Today: 6%

LLM training-Stanford Lecture: 8% - Today: 3%

--------

Fourier Analysis: 22% - Today: 0%

Discrete Mathematics: 33% - Today: 0%

Fundamentals of physics 1: 44% - Today: 0%

Vector Calculus & PDEs: 40% - Today: 0%

Dynamical systems: 36% - Today: 0%

Probability & Statistics: 16% - Today: 0%

Linear Algebra 3/3: 30% - Today: 0%

Information Theory: 6% - Today: 0%

Applied Calculus with Python: 32% - Today: 0%

HF LLM training book: 0% - Today: 0%

-------

✅Calculus 1: 100%

✅Calculus 2: 100%

✅Ordinary Differential equations: 100%

✅Linear Algebra 1/3: Systems and Matrix Equations 100%

✅Linear Algebra 2/3: Matrix Algebra, Determinants, & Eigenvectors 100%

✅Introduction to probability: 100%

Salman King retweeted

🚨 Big news in the AI world!

Baidu’s ERNIE-5.0-Preview-1022 just scored 1432 on the latest LMArena Text Leaderboard, making it #1 in China and #2 globally!

The model really stands out in creative writing, complex reasoning, and following instructions.

The ERNIE-5.0 foundation model is reportedly set to launch at Baidu World 2025 on November 13. The ERNIE series has gone through years of continuous improvement, from multimodal models like ERNIE 4.5 and 4.5 Turbo to deep-thinking models ERNIE X1, X1 Turbo, and X1.1, consistently leading the way in Chinese large language models.

👉 Check out the leaderboard here: lmarena.ai/leaderboard/text

#AI #LLM #ERNIE5 #BaiduWorld2025 #MachineLearning #Innovation

Salman King retweeted

Hope.

Introducing Nested Learning: A new ML paradigm for continual learning that views models as nested optimization problems to enhance long context processing. Our proof-of-concept model, Hope, shows improved performance in language modeling. Learn more: goo.gle/47LJrzI

@GoogleAI

Salman King retweeted

Squidiff: a diffusion-based model to predict transcriptome response to perturbations.

nature.com/articles/s41592-0…

Salman King retweeted

Today’s edition of my newsletter just went out.

🔗 rohan-paul.com/p/alibaba-bac…

Consider subscribing, it's free, and I write it every day.

🇨🇳 Alibaba-backed Moonshot releases Kimi K2 Thinking

🏆 New Stanford+Oxford and other top university study just dropped highlighting flaws in AI benchmarking.

⚖️ Amazon has sued Perplexity alleging that Perplexity’s Comet browser runs an AI shopping agent that logs into Amazon with a user’s credentials.

📈 Google is finally rolling out its most powerful Ironwood AI chip, first introduced in April, taking aim at Nvidia in the coming weeks.

⚖️ 📈 Microsoft Research released Magentic Marketplace, an open-source environment that lets people test how LLM agents buy, sell, negotiate, and pay at scale, revealing real issues in discovery, fairness, and safety.

📈 Edison Scientific launched Kosmos, an autonomous AI researcher that reads literature, writes and runs code, and tests ideas.

Salman King retweeted

Named Entity Recognition is a tool that helps you pick out important terms in text.

It's helpful for extracting meaningful insights from large bodies of text, for example.

In this tutorial, Manish teaches you how it works by building a news analyzer that uses a transformer-based NER model to grab data from a live RSS feed.

freecodecamp.org/news/extrac…
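A rough sketch of the shape of such a news analyzer, not the tutorial's code. Two things are swapped in so it runs offline with only the standard library: the feed is a hard-coded hypothetical sample (`SAMPLE_RSS`) instead of a live RSS URL, and a crude capitalized-phrase regex stands in for the transformer-based NER model. A real version would replace `extract_entities` with a trained model, for example a Hugging Face `pipeline("ner")`, which is an assumption about tooling and not something the post states.

```python
import re
import xml.etree.ElementTree as ET
from collections import Counter

# Hypothetical sample feed; a real analyzer would download this XML
# from a live RSS URL instead.
SAMPLE_RSS = """<rss version="2.0"><channel>
  <title>Example News</title>
  <item><title>Moonshot AI releases Kimi K2 Thinking</title></item>
  <item><title>Amazon sues Perplexity over shopping agent</title></item>
  <item><title>Google rolls out Ironwood chip</title></item>
</channel></rss>"""

# Stand-in for an NER model: runs of capitalized words are treated as
# one entity. This is NOT real NER; it has no entity types and will
# mislabel ordinary sentence-initial words.
ENTITY_PATTERN = re.compile(r"\b[A-Z][A-Za-z0-9]*(?:\s+[A-Z][A-Za-z0-9]*)*")


def headlines(rss_xml):
    """Return the title text of every <item> in an RSS 2.0 document."""
    root = ET.fromstring(rss_xml)
    return [item.findtext("title", default="") for item in root.iter("item")]


def extract_entities(text):
    """Return candidate entity strings found in one headline."""
    return [match.group(0) for match in ENTITY_PATTERN.finditer(text)]


def entity_counts(rss_xml):
    """Count how often each candidate entity appears across the feed."""
    counts = Counter()
    for title in headlines(rss_xml):
        counts.update(extract_entities(title))
    return counts


for entity, count in sorted(entity_counts(SAMPLE_RSS).items()):
    print(f"{entity}: {count}")
```

The split into fetch, extract, and aggregate steps is the part that carries over; only the middle step changes when a real model is plugged in.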

Salman King retweeted

It's rare nowadays to find something that is intuitively important and not yet done well by any major language models.

But *precisely aggregating lots of information over long contexts* is one of those things.

Our new benchmark Oolong tests this ability, see the 🧵 for more!

Salman King retweeted

China will surpass the US in AI.

They just dropped an open-source model that beats GPT-5 and Claude on reasoning benchmarks.

Kimi K2 Thinking from Moonshot AI posted 44.9% on Humanity's Last Exam when GPT-5 only hit 33%. Beat Claude Sonnet 4.5 on competitive programming. Crushed both on agentic search and coding tasks.

This isn't some research lab demo. The model executes 200-300 sequential tool calls without human interference, has a 256K context window, and went live on their platform yesterday with full API access.

Moonshot AI is a 2-year-old startup founded by ex-Tsinghua researchers. No state backing. No CCP money printing their runway. Just 200 engineers in Beijing building test-time scaling that actually works.

The model weights are on Hugging Face right now. Open source. Anyone can run it.

While American AI labs fight over safety theater and regulatory capture, China is shipping production-grade reasoning models faster than we can benchmark them.

DeepSeek taught them how to train cheap, now Moonshot showed them how to scale reasoning without burning $100M per training run.

The gap is closing faster than anyone wants to admit.

🚀 Hello, Kimi K2 Thinking!

The Open-Source Thinking Agent Model is here.

🔹 SOTA on HLE (44.9%) and BrowseComp (60.2%)

🔹 Executes up to 200 – 300 sequential tool calls without human interference

🔹 Excels in reasoning, agentic search, and coding

🔹 256K context window

Built as a thinking agent, K2 Thinking marks our latest efforts in test-time scaling — scaling both thinking tokens and tool-calling turns.

K2 Thinking is now live on kimi.com in chat mode, with full agentic mode coming soon. It is also accessible via API.

🔌 API is live: platform.moonshot.ai

🔗 Tech blog: moonshotai.github.io/Kimi-K2…

🔗 Weights & code: huggingface.co/moonshotai