ML consultant. MASc @UofT, @Mila_Quebec accepted & deferred. Building @OpenAdaptAI, an app that learns to automate tasks in other apps.

Planet Earth

Joined October 2009

- Tweets 552

- Following 506

- Followers 317

- Likes 1,444

Got a 7,000% traffic spike yesterday. Turns out it was a bot network 'advertising' itself by spamming my analytics. You can even set your own bounce rate 😂 Growth hacking has gone full parody.

Richard Abrich retweeted

I've already changed how I build with AI after chatting with @abrichr!

So cool getting insight into how tools like this are built

Check it out!

Want to know how to build a Prompt-to-App tool?

@abrichr just released Fastable, a BYOK full-stack app builder

Learn why Fastable is different than the big names and also his advice on

- developer workflows

- context engineering

- hallucination mitigation

This is another can't-miss episode, check it out!

Richard Abrich retweeted

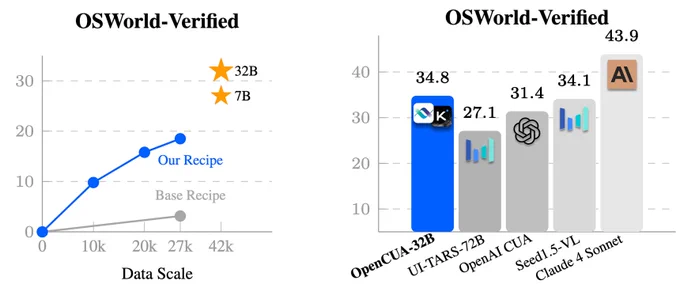

We are super excited to release OpenCUA — the first from 0 to 1 computer-use agent foundation model framework and open-source SOTA model OpenCUA-32B, matching top proprietary models on OSWorld-Verified, with full infrastructure and data.

🔗 [Paper] arxiv.org/abs/2508.09123

📌 [Website] opencua.xlang.ai/

🤖 [Models] huggingface.co/xlangai/OpenC…

📊 [Data] huggingface.co/datasets/xlan…

💻 [Code] github.com/xlang-ai/OpenCUA

🌟 OpenCUA — comprehensive open-source framework for computer-use agents, including:

📊 AgentNet — first large-scale CUA dataset (3 systems, 200+ apps & sites, 22.6K trajectories)

🏆 OpenCUA model — open-source SOTA on OSWorld-Verified (34.8% avg success, outperforms OpenAI CUA)

🖥 AgentNetTool — cross-system computer-use task annotation tool

🏁 AgentNetBench — offline CUA benchmark for fast, reproducible evaluation

💡 Why OpenCUA?

Proprietary CUAs like Claude or OpenAI CUA are impressive🤯 — but there’s no large-scale open desktop agent dataset or transparent pipeline. OpenCUA changes that by offering the full open-source stack 🛠: scalable cross-system data collection, effective data formulation, model training strategy, and reproducible evaluation — powering top open-source models including OpenCUA-7B and OpenCUA-32B that excel in GUI planning & grounding.

Details of OpenCUA framework👇

Richard Abrich retweeted

🙌 Acknowledgement: We thank @ysu_nlp, @CaimingXiong, and the anonymous reviewers for their insightful discussions and valuable feedback. We are grateful to Moonshot AI for providing training infrastructure and annotated data. We also sincerely appreciate Jin Zhang, Hao Yang, Zhengtao Wang, and Yanxu Chen from the Kimi Team for their strong infrastructure support and helpful guidance. The development of our tool is based on the open-source projects DuckTrack @arankomatsuzaki and @OpenAdaptAI; we are very grateful for their commitment to the open-source community.

Finally, we extend our deepest thanks to all annotators for their tremendous effort and contributions to this project. ❤️

Richard Abrich retweeted

Garbage in, garbage out

@abrichr wants you to manage your context

🔥The best AI advice for 2025🔥

Two dozen of the top minds in the AI space share their top advice and lessons learned from the past year on this special episode of Tool Use.

You will not want to miss this one, link in comments

Featuring the brilliant:

@ryancarson

@abrichr

@kubla

@dexhorthy

@diamondbishop

@dillionverma

@freddie_v4

@WolframRvnwlf

@ja3k_

@SarahAllali7

@fpingham

@JungMinki7

@haithehuman

@_jason_today

@sunglassesface

@cj_pais

@KirkMarple

@adamcohenhillel

@aaronwongellis

@IanTimotheos

@FieroTy

Call for Speakers for AI Engineer World's Fair 2025 is open on Sessionize and I've just submitted a session! sessionize.com/ai-engineer-w…

https://openai.com/index/introducing-o3-and-o4-mini/

> This unlocks a new class of problem-solving that blends visual and textual reasoning, reflected in their state-of-the-art performance across multimodal benchmarks.

"describe_element" tool, with description="restart button":

> "Found icon with content 'restart button' at bounds (0.6190802454948425, 0.33572784066200256, 0.35580..."
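The bounds in that output look like fractions of the screen rather than pixels. A minimal sketch of turning such bounds into a click target, assuming they are ordered (x, y, width, height) and normalized to 0..1; the output above is truncated, so that ordering is a guess, and `bounds_to_pixel_center` is a hypothetical helper, not part of OmniMCP:

```python
from typing import Tuple


def bounds_to_pixel_center(
    bounds: Tuple[float, float, float, float],
    screen_width: int,
    screen_height: int,
) -> Tuple[int, int]:
    """Map normalized bounds to the pixel at the center of the box.

    Assumes bounds are (x, y, width, height), each a fraction of the
    screen size. This ordering is an assumption, not confirmed by the tool.
    """
    x, y, w, h = bounds
    center_x = (x + w / 2) * screen_width
    center_y = (y + h / 2) * screen_height
    return round(center_x), round(center_y)


# Illustrative values only, not taken from the tool output above.
print(bounds_to_pixel_center((0.5, 0.25, 0.2, 0.1), 1920, 1080))
```

If the tool actually reports corner pairs (x1, y1, x2, y2), the center would instead be the midpoint of the two corners.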

> Given that:

> ...

> 2. The tests for this refactor are passing.

> ...

> 4. It's currently Friday evening.

>

> Recommendation: Leave the VisualState file move for the next PR.

-- #Gemini 2.5 Pro experimental

😂

OmniMCP in the MCP inspector, calling the "get_screen_state" tool

#MCP

> Yes, I think it is definitely time to start a fresh context. Your suggestion is excellent. Please start a new chat session and use the following summary prompt to get us back on track with the correct current state.

-- Gemini 2.5 Pro (experimental)

🤌

Longest context by far!

Richard Abrich retweeted

Anybody looking for a GUI+ICL-->MCP library should definitely check out OmniMCP which puts Microsoft's OmniParser to use in generating GUI tool use APIs. Early days but pretty neat

omnimcp.openadapt.ai/

Richard Abrich retweeted

OmniMCP – A server that provides rich UI context and interaction capabilities to AI models, enabling deep understanding of user interfaces through visual analysis and precise interaction via Model Context Protocol. via /r/mcp ift.tt/2Giwlug ift.tt/AaRYgeU

⏺ Testing OmniMCP core functionality with synthetic UI...

✅ Visual state parsing test passed

Elements: [('button', 'Submit'), ('text_field', 'Username'), ...]

✅ Element finding test passed

✅ Action verification test passed

✅ All core tests passed!

#agentnative

#llmnative