Fine-tune DeepSeek-OCR on your own language!

(100% local)

DeepSeek-OCR is a 3B-parameter vision model that reaches about 97% OCR precision while using roughly 10× fewer vision tokens than the text tokens the same page would otherwise need.

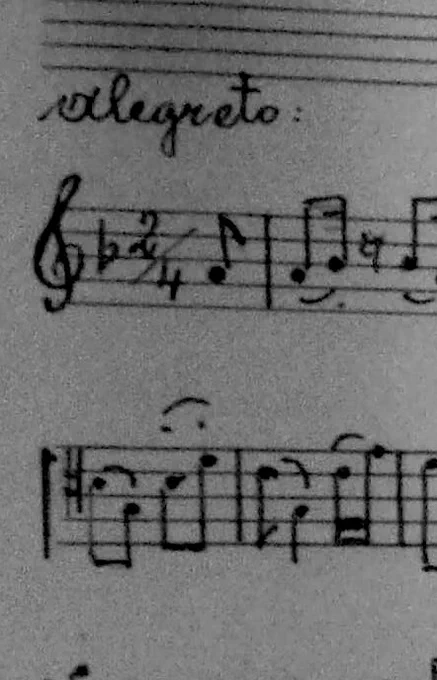

It handles tables, papers, and handwriting without killing your GPU or budget.

Why it matters:

Most vision models treat documents as massive sequences of tokens, making long-context processing expensive and slow.

DeepSeek-OCR uses context optical compression to convert 2D layouts into vision tokens, enabling efficient processing of complex documents.
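To make the "10× fewer tokens" idea concrete, here is a rough sketch of the arithmetic. The patch size, the 16× token compressor, and the 2,560-token page are illustrative assumptions rather than figures from this thread, and both helper names are hypothetical.

```python
def vision_token_count(width: int, height: int,
                       patch_size: int = 16, compression: int = 16) -> int:
    """Estimate vision tokens for an image.

    Assumes (for illustration only) that the encoder splits the image into
    patch_size x patch_size patches and then merges every `compression`
    patch tokens into one vision token.
    """
    patches = (width // patch_size) * (height // patch_size)
    return patches // compression


def compression_ratio(text_tokens: int, vision_tokens: int) -> float:
    """How many text tokens each vision token stands in for."""
    if vision_tokens <= 0:
        raise ValueError("vision_tokens must be positive")
    return text_tokens / vision_tokens


# Hypothetical page: a 1024x1024 render whose text would take 2,560 text tokens.
page_vision_tokens = vision_token_count(1024, 1024)
page_ratio = compression_ratio(2560, page_vision_tokens)
print(page_vision_tokens, page_ratio)
```

Under these assumed settings, a page that would cost thousands of text tokens fits in a few hundred vision tokens, which is where the savings on long documents come from.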

The best part?

You can easily fine-tune it for your specific use case on a single GPU.

I used Unsloth to run this experiment on Persian text and saw character error rate drop by 88.26 percentage points.

↳ Base model: 149% character error rate (CER)

↳ Fine-tuned model: 60% CER (57% more accurate)

↳ Training time: 60 steps on a single GPU
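A CER above 100% can look like a typo, so here is a minimal sketch of how the metric is usually computed: character-level edit distance divided by the reference length. This is the common definition, not necessarily the exact evaluation script used for the numbers above, and the sample strings are made up.

```python
def levenshtein(ref: str, hyp: str) -> int:
    """Minimum number of character insertions, deletions, and substitutions
    needed to turn `ref` into `hyp` (row-by-row dynamic programming)."""
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i]
        for j, h in enumerate(hyp, start=1):
            cost = 0 if r == h else 1
            curr.append(min(prev[j] + 1,          # delete a reference char
                            curr[j - 1] + 1,      # insert a hypothesis char
                            prev[j - 1] + cost))  # match or substitute
        prev = curr
    return prev[-1]


def cer(ref: str, hyp: str) -> float:
    """Character error rate: edit distance normalized by reference length."""
    if not ref:
        raise ValueError("reference text must be non-empty")
    return levenshtein(ref, hyp) / len(ref)


# Made-up example: the model repeats the word, so insertions outnumber
# the reference characters and CER goes past 100%.
repeated_cer = cer("salam", "salam salam salam")
print(f"{repeated_cer:.0%}")

# Absolute drop using the rounded figures from the post, in percentage points.
absolute_drop_points = 149 - 60
print(absolute_drop_points)
```

Because the denominator is the reference length, any output with enough extra characters scores above 100%. With the rounded figures, the drop comes to 89 points, in line with the reported 88.26.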

Persian was just the test case. You can swap in your own dataset for any language, document type, or specific domain you're working with.

I've shared the complete guide in the next tweet - all the code, notebooks, and environment setup ready to run with a single click.

Everything is 100% open-source!

Nov 8, 2025 · 12:30 PM UTC

Tech Stack:

- @UnslothAI to run and fine-tune the model

- @LightningAI environments for hosting and deployment

Find all code and everything you need here: lightning.ai/lightning-purch…