Founder @ Byzantium Capital. Formerly: Value Voting, Google Inbox, Apture CTO, Stanford CS PhD dropout. I read a lot of books

Joined August 2008

- Tweets 5,390

- Following 242

- Followers 1,361

- Likes 37,195

Can Sar retweeted

This Anthropic post confirms what I’ve been seeing with MCP: loading all the definitions upfront burns tokens fast.

It also buried the lede that MCP creates context rot.

There's an easy fix but it requires us to do actual engineering rather than spray and pray: progressive disclosure through a good router.

Your agent gets exactly what it needs, when it needs it, without paying the context or token cost for unused tools.
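A minimal sketch of what progressive disclosure through a router could look like, assuming a simple in-memory registry. Every name here (`ToolDef`, `ToolRouter`, the three example tools) is hypothetical and for illustration only; none of it is part of MCP or any Anthropic SDK. The agent first sees a cheap index of names and summaries, searches it, and loads a full definition only for the tool it picks.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ToolDef:
    name: str
    summary: str  # one-line description, cheap to show upfront
    schema: dict  # full definition, loaded only on demand


class ToolRouter:
    """Hypothetical router: exposes summaries first, full schemas on request."""

    def __init__(self, tools):
        self._tools = {t.name: t for t in tools}

    def index(self):
        # Cheap listing: names and summaries only, no schemas.
        return [f"{t.name}: {t.summary}" for t in self._tools.values()]

    def search(self, query, limit=3):
        # Naive whole-word match over names and summaries (an assumption;
        # a real router might use embeddings or a ranking model).
        words = set(query.lower().split())
        scored = []
        for t in self._tools.values():
            tokens = set(f"{t.name} {t.summary}".lower().replace("_", " ").split())
            score = len(words & tokens)
            if score:
                scored.append((score, t.name))
        scored.sort(key=lambda pair: (-pair[0], pair[1]))
        return [name for _, name in scored[:limit]]

    def describe(self, name):
        # Full definition enters the context only when the agent asks for it.
        return self._tools[name].schema


router = ToolRouter([
    ToolDef("crm_get_contact", "Look up a contact record by email",
            {"input": {"email": "string"}}),
    ToolDef("drive_read_file", "Read a file from cloud storage",
            {"input": {"path": "string"}}),
    ToolDef("calendar_list_events", "List upcoming calendar events",
            {"input": {"days": "integer"}}),
])

matches = router.search("read file")
schema = router.describe(matches[0])
```

With this shape, the upfront context cost scales with the number of summaries, and the cost of a full schema is paid only for tools the agent actually selects.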

New on the Anthropic Engineering blog: tips on how to build more efficient agents that handle more tools while using fewer tokens.

Code execution with the Model Context Protocol (MCP): anthropic.com/engineering/co…

This is sad to hear. Learn new tools people! This is by far the most exciting time in software land in the last 20 years.

Can Sar retweeted

I'm quite careful about "attention hygiene" during my working hours, but one thing this taught me is that "poor hygiene" in off-hours (even the previous day!) can really impact the quality of my awareness.

Can Sar retweeted

For a few years, I've had weekly 2-3hr readings for a course. It's a startling barometer: if I'd spent a while on X/YouTube/etc in the prior 48 hours, I'll want to distract myself every few minutes. But if I've been scrolling-free, it'll be easy to settle in for an unbroken hour!

Can Sar retweeted

Steve Newman gives a great overview of the gigantic factual disagreements underlying a lot of disagreement about AI policy

Can Sar retweeted

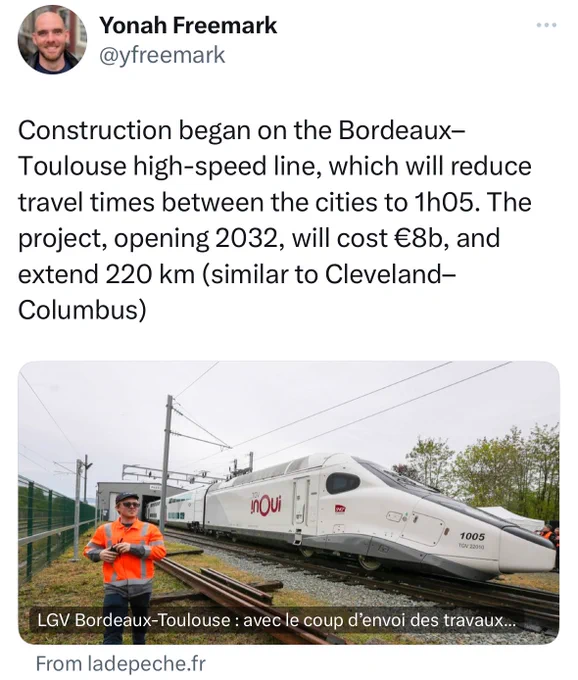

France is building 220km of high speed rail for the same cost as San Francisco building 3km.

Transit costs are one of the biggest barriers to America building world-class sustainable infrastructure, and we need our politicians to enact policies to lower construction costs.

Can Sar retweeted

This from @elidourado is still the best articulation of the specifics for an abundance agenda in Washington, DC

Can Sar retweeted

I'm not an effective altruist, but I find the recent genre of pieces like the below a bit strange. Essentially all of the major AI lab leaders agree that AI potentially poses enormous risks[1], as does a majority of the US public[2]. It's not a crank view. EA was simply among the very earliest organized groups to perceive and act on this risk. In addition, EA's contrarian concerns have elsewhere been shown to be reasonable: long before COVID, EAs stood out for their worry about major pandemics. Assessments that deride EA as a cult while failing to acknowledge these counterintuitive successes strike me as unreasonably uncharitable. If asked "which 2018 community now looks most prescient based on how the intervening 5 years unfolded?", I think it's hard to come up with better nominations than EA.

politico.com/news/2023/12/30…

[1] nytimes.com/2023/05/30/techn…

[2] rethinkpriorities.org/public…: a majority rate the proposition that AI will cause human extinction within 50 years as at least "slightly likely".

Can Sar retweeted

some excellent takes from @labenz on the latest episode of the @CogRev_Podcast that I just wanted to draw attention to

is the development of AGI really inevitable, and the singular way to advance human civilisation, or are these deeply ideological claims?

Can Sar retweeted

AFAICT everyone involved in this is both ridiculously smart and a good person and all deserve our love, respect, and sympathy for what is an incredibly stressful time.

Can Sar retweeted

This whole thing still happened for a reason, and OpenAI needs to do a much better job explaining it.

If internal concerns about upcoming capabilities — and how they were handled — really came to this boiling point, people need to know.

Can Sar retweeted

The whole OpenAI conversation is so infuriatingly stupid right now.

The OpenAI board needs to say what Sam was fired for right now. Period. Full stop.

If it was Ilya not liking being demoted, GTFO.

If it was them not liking Sam raising money for Nvidia competitors and AI phones, reasonable people might disagree but even those who view it as reasonable will have to admit it was massively bungled.

If it was because of GENUINE fear of the consequences of specific actions that betray the nonprofit's mission, they need to be specific about what those actions were and where the fear emanates from.

And I'm sorry, they made this bed so hiding behind anything along the lines of "it would be too bad for the public to know" won't cut it.

The harm that the board is causing by NOT getting specific -- to the company AND to any sort of safety mission -- is hard to overstate.

Can Sar retweeted

Since I am now somewhat of a journalist in such matters, I should note that if anyone wants to provide any info (on or off record) on recent events at OpenAI, DMs are open.

Can Sar retweeted

It turns out that it’s hard to control the actions of a highly intelligent, hard-working, and charismatic agent.

Can Sar retweeted

Manhattan from 100 years ago looks like SF's Sunset and Richmond today. Imagine if Manhattanites back then had said "Sorry, we're built out!" That's what SF has been doing for the past few decades.

Can Sar retweeted

The reward for reading 20k words of an EO that is almost entirely calls for government reports is to read the diatribes about how it is government overreach and full of premature regulations and assumptions, when you spent hours confirming that nope, it's all report requests.