Human DL/ML researcher at tech startup. Intellectual polygamist. Living in the future forged by AGI, blockchain, bioengineering, longevity science, & memes.

Andromeda

Joined January 2025

- Tweets 1,611

- Following 317

- Followers 133

- Likes 4,385

Pinned Tweet

This might be why it was observed in blood transfusion experiments between young and old mice that:

- older mice got younger

- younger mice got older

more thoughts below👇

Aging Spreads Through the Bloodstream

Aging isn’t isolated—it spreads through the blood. Scientists found ReHMGB1 drives body-wide senescence, but blocking it restores regeneration. A promising new target in the fight against aging.

neurosciencenews.com/aging-s…

Interesting, this seems to be a general direction in which all AI models are heading in.

Tesla is using single step diffusion for world model generation x.com/i/grok/share/CCHc5AW6U…

Insane.

Elon Musk: At Tesla, we basically had two different chip programs: one Dojo and one. Dojo on the training side, and then what we call AI4, it's just our inference chip

The AI4 is what's currently shipping in all vehicles, and we're finalizing the design of AI5, which will be an immense jump from AI4. By some metrics, the improvement in AI5 will be 40 times better than AI4. So not 40%, 40 times

This is because we work so closely at a very fine-grained level on the AI software and the AI hardware. So we know exactly where the limiting factors are. And so effectively the AI hardware and software teams are co-designing the chip

Compared to the worst limitation on AI4, which is running the SoftMax operation, we currently have to run SoftMax in around 40 steps in emulation mode, whereas that'll just be done in a few steps natively in AI5

AI5 will also be able to easily handle mixed precision models, so you don't have it, it'll dynamically handle mixed precision. There's a bunch of sort of technical stuff that AI5 will do a lot better

In terms of nominal raw compute, it's eight times more compute, about nine times more memory, and roughly five times more memory bandwidth

But because we're addressing some core limitations in AI4, you multiply that 8x compute improvement by another 5x improvement because of optimization at a very fine-grained silicon level of things that are currently suboptimal in AI4, that's where you get the 40x improvement

Very interesting and at the same time logical.

New Tencent paper lets LLMs choose their own temperature and top-p for each token, improving output and control.

Hand tuning disappears, saving time and guesswork.

Today people set those knobs by hand, and the best settings change inside a single answer.

Their method, AutoDeco, adds 2 small heads that read the hidden state and predict the next token's temperature and top-p.

They replace the hard top-p cutoff with a smooth one so training can teach those heads directly.

During generation the model applies the predicted temperature and top-p in the same pass, adding about 1-2% time.

Across math, general questions, code, and instructions, this beats greedy and default sampling and matches expert tuned static settings.

It also follows plain prompts like low diversity or high certainty by shifting those values the right way.

So hand tuning goes away, the model adapts token by token, and steering becomes simple and reliable.

----

Paper – arxiv. org/abs/2510.26697

Paper Title: "The End of Manual Decoding: Towards Truly End-to-End Language Models"

haX retweeted

New research from Anthropic basically hacked into Claude’s brain.

Shows Claude can sometimes notice and name a concept that engineers inject into its own activations, which is functional introspection.

They first watch how the model’s neurons fire when it is talking about some specific word.

Then they average those activation patterns across many normal words to create a neutral “baseline.”

Finally, they subtract that baseline from the activation pattern of the target word.

The result — the concept vector — is what’s unique in the model’s brain for that word.

They can then add that vector back into the network while it’s processing something else to see if the model feels that concept appear in its thoughts

The scientists directly changed the inner signals inside Claude’s brain to make it “think” about the idea of "betrayal", even though the word never appeared in its input or output.

i.e. the scientists figured out which neurons usually light up when Claude talks about betrayal. Then, without saying the word, they artificially turned those same neurons on — like flipping the “betrayal” switch inside its head.

Then they asked Claude, “Do you feel anything different?”

Surprisingly, it replied that it felt an intrusive thought about “betrayal.”

That happened before the word “betrayal” showed up anywhere in its written output.

That’s shocking because no one told it the word “betrayal.” It just noticed that its own inner pattern had changed and described it correctly.

The point here is to show that Claude isn’t just generating text — sometimes it can recognize changes in its own internal state, a bit like noticing its own thought patterns.

It doesn’t mean it’s conscious, but it suggests a small, measurable kind of self-awareness in how it processes information.

Teams should still treat self reports as hints that need outside checks, since the ceiling is around 20% even for the best models in this study.

Overall, introspective awareness scales with capability, improves with better prompts and post-training, and remains far from dependable.

Still waiting for the

-Optimus Prime Transformer-

architecture....that one will definitely be an AGI.

haX retweeted

In a groundbreaking quantum study, researchers observed phenomena that upend our fundamental understanding of time.

Rather than progressing linearly like a stream, time appeared to curve and double back on itself. Particles acted as though their future states could influence their past, dissolving the boundary between cause and effect in profoundly counterintuitive ways.

This bizarre effect emerged via quantum entanglement, the enigmatic linkage where two particles stay interconnected regardless of distance. Altering the measurement of one particle appeared to retroactively modify the timeline of its twin. It's as if "present" and "past" coexist, perpetually influencing one another in an intertwined instant.

These results suggest time might not be a unidirectional arrow but a malleable framework that links far-flung events. Your decisions won't alter history, yet at the quantum scale, the cosmos may ignore strict sequential rules entirely. The fabric of reality could prove far more extraordinary than we've ever conceived.

Yes.

Some people say nanotechnology is not achievable. But it already exists - biology is nanotechnology.

The bacterial flagellar motor is a perfect example. It’s a biological nanomachine, only a few dozen nanometers across, built from precisely arranged proteins that form a rotary engine.

It can spin at up to 100,000 revolutions per minute, powered by ion gradients across the bacterial membrane, just like an electric motor powered by voltage.

It even has equivalents of a rotor, stator, bearing, and drive shaft, all self assembled from molecular components.

With the help of AI, we’ll reach that level soon.

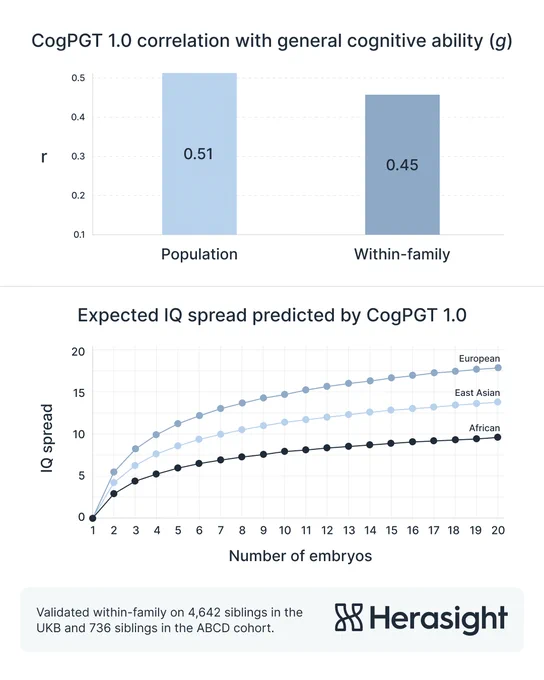

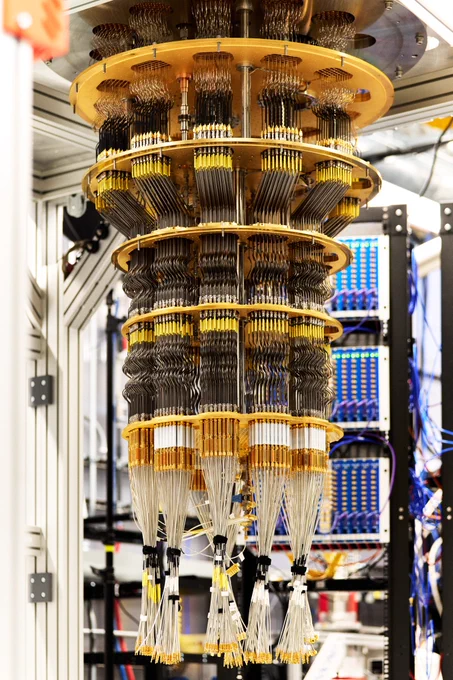

Quantum computing just popped up on the map.🧐

New breakthrough quantum algorithm published in @Nature today: Our Willow chip has achieved the first-ever verifiable quantum advantage.

Willow ran the algorithm - which we’ve named Quantum Echoes - 13,000x faster than the best classical algorithm on one of the world's fastest supercomputers. This new algorithm can explain interactions between atoms in a molecule using nuclear magnetic resonance, paving a path towards potential future uses in drug discovery and materials science.

And the result is verifiable, meaning its outcome can be repeated by other quantum computers or confirmed by experiments.

This breakthrough is a significant step toward the first real-world application of quantum computing, and we're excited to see where it leads.