Pinned Tweet

Pasión de Campeones (Passion of Champions)

After several attempts on @udiomusic, I managed to create something fairly coherent (using only the random option together with the base prompt).

Even though some errors are noticeable, the lyrics, vocals, and instrumental sound produced in each generated and extended segment are still astonishing.

Prompt: Traditional Argentine tango style, narrating the football team winning the final against France in Qatar and the fervor of a people united by a passion

udio.com/songs/tLhZPTMjq1AZX…

Nicolás Catalogna retweeted

K-means is an essential algorithm for Data Science.

But it's confusing for beginners.

Let me demolish your confusion:

Advanced ML Courses: mltut.com/best-advanced-mach…

@KirkDBorne

@antgrasso

@ronald_vanloon

#MachineLearning #DeepLearning #BigData #Datascience #ML #HealthTech #DataVisualization #ArtificialInteligence #SoftwareEngineering #GenAI #deeplearning #ChatGPT #OpenAI #python #AI #keras #SQL #Statistics #AgenticAI #AIAgent
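The thread's own explanation is not included here. For reference, a minimal NumPy sketch of the standard K-means procedure (Lloyd's algorithm): alternately assign each point to its nearest centroid, then move each centroid to the mean of its assigned points. The function name `kmeans` and the plain random initialization are our own choices, not taken from the thread; library implementations such as scikit-learn's `KMeans` default to k-means++ seeding instead.

```python
import numpy as np

def kmeans(X: np.ndarray, k: int, n_iters: int = 100, seed: int = 0):
    """Lloyd's algorithm: returns (centroids, labels)."""
    rng = np.random.default_rng(seed)
    # Initialize centroids with k distinct data points chosen at random
    centroids = X[rng.choice(len(X), size=k, replace=False)].astype(float)
    for _ in range(n_iters):
        # Assignment step: squared Euclidean distance from every point to every centroid
        dists = ((X[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
        labels = dists.argmin(axis=1)
        # Update step: each centroid moves to the mean of its points (kept in place if empty)
        new_centroids = np.array([
            X[labels == j].mean(axis=0) if np.any(labels == j) else centroids[j]
            for j in range(k)
        ])
        if np.allclose(new_centroids, centroids):  # centroids stopped moving: converged
            break
        centroids = new_centroids
    return centroids, labels

X = np.array([[0, 0], [0, 1], [1, 0], [10, 10], [10, 11], [11, 10]], dtype=float)
centroids, labels = kmeans(X, k=2)
print(labels)  # points 0-2 share one label, points 3-5 the other
```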

Nicolás Catalogna retweeted

👇a superb paper 👇

Patterns for securing agents against prompt injection

arxiv.org/abs/2506.08837 🔖

Nicolás Catalogna retweeted

Explaining why BF16 → FP16 precision switch works for reinforcement learning (RL) ⬇️

Researchers from Sea AI Lab and @NUSingapore found that in RL, precision matters most.

Typically, during RL fine-tuning, rounding errors and hardware-specific optimizations cause a mismatch between the training and inference engines - and RL becomes unstable.

So the researchers offered a simple change in precision format: from BF16 to the older FP16. Why did this work?

Both formats use 16 bits to represent numbers but distribute them differently:

- BF16 uses more exponent bits (8 vs. 5), giving it a wider numerical range, which works better for massive-scale pre-training.

- FP16 prioritizes mantissa (or fraction) bits (10 vs. 7), the part that stores numerical detail.

In rough numbers, FP16 gives ~8× more numerical precision than BF16: its 3 extra mantissa bits make the gap between neighboring representable values 2³ = 8 times smaller.

So the switch shifts the focus to precision at the RL training stage, when all the work that needs a wide value range has already been done.
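To make the bit-layout argument concrete, here is a small Python sketch, assuming NumPy is available. NumPy has a `float16` type but no bfloat16, so the hypothetical helper `to_bf16` emulates BF16 by rounding a float32 to its top 16 bits; it is meant for finite inputs only.

```python
import struct
import numpy as np

def to_bf16(x: float) -> float:
    """Round x to the nearest bfloat16 value (ties to even), returned as a Python float.

    bfloat16 keeps the top 16 bits of an IEEE float32: 1 sign, 8 exponent, 7 mantissa bits.
    """
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    bits += 0x7FFF + ((bits >> 16) & 1)  # round to nearest, ties to even
    bits = (bits >> 16) << 16            # drop the low 16 mantissa bits
    return struct.unpack("<f", struct.pack("<I", bits & 0xFFFFFFFF))[0]

# Machine epsilon = gap between 1.0 and the next representable value
FP16_EPS = float(np.finfo(np.float16).eps)  # 2**-10 (10 mantissa bits)
BF16_EPS = to_bf16(1.0 + 2**-7) - 1.0       # 2**-7  (7 mantissa bits)

print(FP16_EPS, BF16_EPS, BF16_EPS / FP16_EPS)  # 0.0009765625 0.0078125 8.0
print(float(np.float16(1.001)), to_bf16(1.001))  # FP16 keeps the 0.001, BF16 rounds it away
print(float(np.finfo(np.float16).max), to_bf16(70000.0))  # FP16 tops out at 65504; BF16 goes far beyond
```

The trade-off shows up in both directions: FP16 resolves small differences near 1.0 that BF16 loses, while BF16 still represents values beyond FP16's maximum.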

For a deeper explanation, check out our new article: turingpost.com/p/fp16

Nicolás Catalogna retweeted

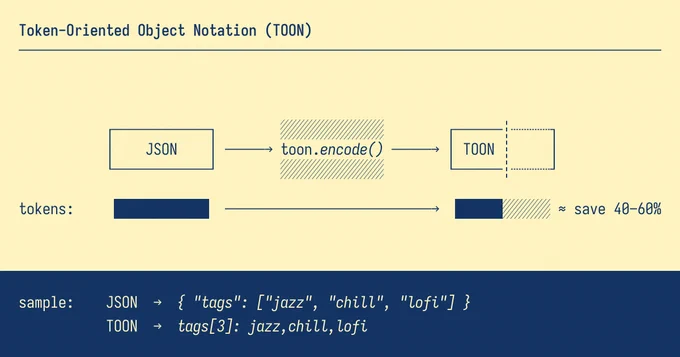

A simple trick cuts your LLM costs by 50%!

Just stop using JSON and use this instead:

TOON (Token-Oriented Object Notation) slashes your LLM token usage in half while keeping data perfectly readable.

Here's why it works:

TOON's sweet spot: uniform arrays with consistent fields per row. It merges YAML's indentation and CSV's tabular structure, optimized for minimal tokens.

Look at the example below.

JSON:

{
  "users": [
    { "id": 1, "name": "Alice", "role": "admin" },
    { "id": 2, "name": "Bob", "role": "user" }
  ]
}

TOON:

users[2]{id,name,role}:
  1,Alice,admin
  2,Bob,user

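The TOON version declares the field names once in a header instead of repeating them, along with their quotes and braces, in every row, so far fewer tokens are needed to represent the same information.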
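A minimal Python sketch of how the tabular form above can be produced from a list of uniform records. `to_toon_table` is a hypothetical helper, not the official TOON library: it only covers the flat, uniform case and does no quoting or escaping, so values containing commas, quotes, or newlines would need the full spec.

```python
import json
from typing import Any, Dict, List

def to_toon_table(key: str, rows: List[Dict[str, Any]]) -> str:
    """Encode a non-empty list of flat, uniform dicts in TOON's tabular form.

    Sketch only: no quoting/escaping, so values must not contain commas,
    quotes, or newlines.
    """
    if not rows:
        raise ValueError("expected at least one row")
    fields = list(rows[0])
    if any(list(row) != fields for row in rows):
        raise ValueError("rows are not uniform; use JSON or TOON's YAML-like fallback")
    header = f"{key}[{len(rows)}]{{{','.join(fields)}}}:"  # length + field list, declared once
    lines = ["  " + ",".join(str(row[f]) for f in fields) for row in rows]
    return "\n".join([header, *lines])

USERS = [{"id": 1, "name": "Alice", "role": "admin"},
         {"id": 2, "name": "Bob", "role": "user"}]
toon = to_toon_table("users", USERS)
print(toon)
print(len(json.dumps({"users": USERS})), "chars as JSON vs", len(toon), "as TOON")
```

Character counts are only a rough proxy; actual token savings depend on the model's tokenizer.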

To summarise, here are the key features:

💸 30–60% fewer tokens than JSON

🔄 Borrows the best from YAML & CSV

🤿 Built-in validation with explicit lengths & fields

🍱 Minimal syntax (no redundant braces, brackets, etc.)

IMPORTANT!!

That said, for deeply nested or non-uniform data, JSON might be more efficient.

In the next tweet, I've shared some benchmark results demonstrating the effectiveness of this technique in reducing the number of tokens and improving retrieval accuracy with popular LLM providers.

Where do you think this could be effective in your existing workflows?

Find the relevant links in the next tweet!

Nicolás Catalogna retweeted

Supervised Fine-Tuning (SFT) + Reinforcement Learning with Verifiable Rewards (RLVR) = Supervised Reinforcement Learning (SRL)

Google Cloud AI Research introduced SRL, a new training method designed to overcome the limitations of SFT and RLVR.

The main idea: it treats problem-solving as a sequence of logical actions.

Here is how it works:

Nicolás Catalogna retweeted

New @AIatMeta paper explains when a smaller, curated dataset beats using everything.

Standard training wastes effort because many examples are redundant or wrong.

They formalize a label generator, a pruning oracle, and a learner.

From this, they derive exact error laws and sharp regime switches.

With a strong generator and plenty of data, keeping hard examples works best.

With a weak generator or small data, keeping easy examples or keeping more helps.

They analyze 2 modes: label-agnostic pruning, which selects examples by their features, and label-aware pruning, which first filters out wrong labels.

ImageNet and LLM math results match the theory, and pruning also prevents collapse in self-training.
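The regime switch can be illustrated with a simple difficulty-based pruning rule. This is a sketch under our own assumptions, not the paper's pruning oracle: `scores` is a hypothetical per-example difficulty (for instance a reference model's loss), and `choose_strategy` only encodes the two qualitative regimes summarized above.

```python
import numpy as np

def prune_by_difficulty(scores: np.ndarray, keep_frac: float, keep: str = "hard") -> np.ndarray:
    """Return sorted indices of the examples to keep.

    scores: hypothetical per-example difficulty, higher = harder (e.g. a model's loss).
    keep:   "hard" keeps the highest-scoring fraction, "easy" the lowest.
    """
    n_keep = max(1, int(round(keep_frac * len(scores))))
    order = np.argsort(scores, kind="stable")  # easiest first
    chosen = order[-n_keep:] if keep == "hard" else order[:n_keep]
    return np.sort(chosen)

def choose_strategy(strong_generator: bool, plenty_of_data: bool) -> str:
    # Qualitative summary of the two regimes in the post, not the paper's exact thresholds
    return "hard" if strong_generator and plenty_of_data else "easy"

scores = np.array([0.1, 2.0, 0.5, 3.0])
print(prune_by_difficulty(scores, 0.5, choose_strategy(True, True)))   # [1 3]
print(prune_by_difficulty(scores, 0.5, choose_strategy(False, True)))  # [0 2]
```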

----

Paper – arxiv.org/abs/2511.03492

Paper Title: "Why Less is More (Sometimes): A Theory of Data Curation"

Nicolás Catalogna retweeted

5 Agentic AI design patterns, explained visually!

Agentic behaviors allow LLMs to refine their output by incorporating self-evaluation, planning, and collaboration!

The visual depicts the 5 most popular design patterns for building AI Agents.

1️⃣ Reflection pattern

The AI reviews its own work to spot mistakes and iterate until it produces the final response.
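A minimal sketch of the reflection loop. `generate` and `critique` are hypothetical callables standing in for LLM calls (they are not CrewAI's or any other library's API); the critic returns `None` when it has no more objections.

```python
from typing import Callable, Optional

def reflect(task: str,
            generate: Callable[[str, Optional[str]], str],
            critique: Callable[[str, str], Optional[str]],
            max_rounds: int = 3) -> str:
    feedback: Optional[str] = None
    draft = ""
    for _ in range(max_rounds):
        draft = generate(task, feedback)  # draft, or revise using the last feedback
        feedback = critique(task, draft)  # self-review: None means "no issues found"
        if feedback is None:
            break
    return draft  # best effort if max_rounds is exhausted

# Hypothetical stand-ins for LLM calls
def toy_generate(task: str, feedback: Optional[str]) -> str:
    return "4" if feedback else "5"

def toy_critique(task: str, draft: str) -> Optional[str]:
    return None if draft == "4" else "2 + 2 is not 5; recompute."

print(reflect("What is 2 + 2?", toy_generate, toy_critique))  # 4
```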

2️⃣ Tool use pattern

Tools allow LLMs to gather more information by:

- Querying a vector database

- Executing Python scripts

- Invoking APIs, etc.

This is helpful since the LLM is not solely reliant on its internal knowledge.

3️⃣ ReAct (Reason and Act) pattern

ReAct combines the above two patterns:

- The Agent reflects on the generated outputs.

- It interacts with the world using tools.

Frameworks like CrewAI use this pattern by default.
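The ReAct loop can be sketched the same way: the model alternates a thought with either a tool call or a final answer, and each tool result is appended to the transcript it sees next. The scripted `policy` and the toy `calculator` tool are hypothetical stand-ins for an LLM and real tools, not CrewAI internals.

```python
import math
from typing import Callable, Dict, List, Tuple

# A policy maps the transcript so far to (thought, action, argument);
# action is a tool name or "finish", in which case argument is the answer.
Policy = Callable[[List[str]], Tuple[str, str, str]]

def react(question: str, policy: Policy,
          tools: Dict[str, Callable[[str], str]], max_steps: int = 5) -> str:
    transcript = [f"Question: {question}"]
    for _ in range(max_steps):
        thought, action, arg = policy(transcript)  # Reason...
        transcript.append(f"Thought: {thought}")
        if action == "finish":
            return arg
        observation = tools[action](arg)           # ...then Act
        transcript.append(f"Action: {action}[{arg}]")
        transcript.append(f"Observation: {observation}")
    raise RuntimeError("no final answer within max_steps")

# Hypothetical toy tool and scripted policy standing in for a real LLM
TOOLS = {"calculator": lambda expr: str(math.prod(int(t) for t in expr.split("*")))}

def scripted_policy(transcript: List[str]) -> Tuple[str, str, str]:
    if not transcript[-1].startswith("Observation:"):
        return ("I should use the calculator.", "calculator", "17 * 23")
    return ("The observation answers the question.", "finish",
            transcript[-1].split(": ", 1)[1])

print(react("What is 17 * 23?", scripted_policy, TOOLS))  # 391
```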

4️⃣ Planning pattern

Instead of solving a task in one go, the AI creates a roadmap by:

- Subdividing tasks

- Outlining objectives

This strategic thinking solves tasks more effectively. In CrewAI, specify `planning=True` to use Planning.

5️⃣ Multi-Agent pattern

- There are several agents, each with a specific role and task.

- Each agent can also access tools.

All agents work together to deliver the final outcome, while delegating tasks to other agents if needed.

Those were the 5 most popular Agentic AI design patterns!

Which one do you use the most?

____

Find me → @_avichawla

Every day, I share tutorials and insights on DS, ML, LLMs, and RAGs.

Nicolás Catalogna retweeted

For you programmers!

Nicolás Catalogna retweeted

Exciting big Kimi K2 Thinking release!

More experts, fewer heads, and even more thinking!

🚀 Hello, Kimi K2 Thinking!

The Open-Source Thinking Agent Model is here.

🔹 SOTA on HLE (44.9%) and BrowseComp (60.2%)

🔹 Executes up to 200–300 sequential tool calls without human interference

🔹 Excels in reasoning, agentic search, and coding

🔹 256K context window

Built as a thinking agent, K2 Thinking marks our latest efforts in test-time scaling — scaling both thinking tokens and tool-calling turns.

K2 Thinking is now live on kimi.com in chat mode, with full agentic mode coming soon. It is also accessible via API.

🔌 API is live: platform.moonshot.ai

🔗 Tech blog: moonshotai.github.io/Kimi-K2…

🔗 Weights & code: huggingface.co/moonshotai

Nicolás Catalogna retweeted

Integrating 𝗔𝗴𝗲𝗻𝘁𝗶𝗰 𝗥𝗔𝗚 Systems via 𝗠𝗖𝗣 👇

If you are building RAG systems that pull from many data sources, there is most likely some agency involved, at least at the stage where the data sources for retrieval are selected.

This is how MCP enriches the evolution of your Agentic RAG systems in such a case (𝘱𝘰𝘪𝘯𝘵 2.):

𝟭. Analysis of the user query: we pass the original user query to an LLM-based Agent for analysis. This is where:

➡️ The original query can be rewritten, sometimes multiple times, to create either a single query or multiple queries to be passed down the pipeline.

➡️ The agent decides if additional data sources are required to answer the query.

𝟮. If additional data is required, the Retrieval step is triggered. We could tap into a variety of data types, for example:

➡️ Real time user data.

➡️ Internal documents that a user might be interested in.

➡️ Data available on the web.

➡️ …

𝗧𝗵𝗶𝘀 𝗶𝘀 𝘄𝗵𝗲𝗿𝗲 𝗠𝗖𝗣 𝗰𝗼𝗺𝗲𝘀 𝗶𝗻:

✅ Each data domain can manage its own MCP Servers, exposing specific rules for how its data should be used.

✅ Security and compliance can be ensured at the Server level for each domain.

✅ New data domains can be easily added to the MCP server pool in a standardised way without rewriting the Agent, enabling decoupled evolution of the system in terms of 𝗣𝗿𝗼𝗰𝗲𝗱𝘂𝗿𝗮𝗹, 𝗘𝗽𝗶𝘀𝗼𝗱𝗶𝗰 𝗮𝗻𝗱 𝗦𝗲𝗺𝗮𝗻𝘁𝗶𝗰 𝗠𝗲𝗺𝗼𝗿𝘆.

✅ Platform builders can expose their data to external consumers in a standardised way, enabling easy access to data on the web.

✅ AI Engineers can continue to focus on the topology of the Agent.

𝟯. Retrieved data is consolidated and reranked by a model more powerful than the regular embedder, which significantly narrows down the data points.

𝟰. If there is no need for additional data, we try to compose the answer (or multiple answers or a set of actions) straight via an LLM.

𝟱. The answer gets analyzed, summarized and evaluated for correctness and relevance:

➡️ If the Agent decides that the answer is good enough, it gets returned to the user.

➡️ If the Agent decides that the answer needs improvement, we try to rewrite the user query and repeat the generation loop.
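The five steps can be sketched as a small control loop. Every callable here (`analyze`, `rerank`, `generate`, `is_good`) and the `sources` mapping are hypothetical placeholders; in the architecture described above, each entry in `sources` would sit behind a domain-owned MCP server, which this sketch does not model.

```python
from typing import Callable, Dict, List, Optional, Tuple

def agentic_rag(query: str,
                analyze: Callable[[str], Tuple[str, List[str]]],
                sources: Dict[str, Callable[[str], List[str]]],
                rerank: Callable[[str, List[str]], List[str]],
                generate: Callable[[str, List[str]], str],
                is_good: Callable[[str, str], bool],
                top_k: int = 3,
                max_loops: int = 3) -> Optional[str]:
    for _ in range(max_loops):
        # 1. Rewrite the query and decide which data sources are needed (possibly none)
        rewritten, needed = analyze(query)
        # 2. Retrieval: each named source stands in for a domain-owned MCP server
        context: List[str] = []
        for name in needed:
            context.extend(sources[name](rewritten))
        # 3. Consolidate (deduplicate, keeping order) and rerank, keeping only top_k
        if context:
            context = rerank(rewritten, list(dict.fromkeys(context)))[:top_k]
        # 4. Compose the answer (with empty context if no extra data was needed)
        answer = generate(rewritten, context)
        # 5. Evaluate: return if good enough, otherwise loop with the rewritten query
        if is_good(query, answer):
            return answer
        query = rewritten
    return None

# Hypothetical toy stubs
SOURCES = {"docs": lambda q: ["MCP standardizes tool access", "unrelated note",
                              "MCP standardizes tool access"]}
analyze_stub = lambda q: (q.lower(), ["docs"])
rerank_stub = lambda q, docs: sorted(
    docs, key=lambda d: -len(set(d.lower().split()) & set(q.split())))
generate_stub = lambda q, ctx: ctx[0] if ctx else "I don't know"
is_good_stub = lambda q, a: "MCP" in a

print(agentic_rag("What does MCP standardize?", analyze_stub, SOURCES,
                  rerank_stub, generate_stub, is_good_stub))
```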

Are you using MCP in your Agentic RAG systems? Let me know about your experience in the comment section 👇

#LLM #AI #MachineLearning

Nicolás Catalogna retweeted

Keeping an eye on the results of the Kaggle ARC AGI 2 competition. After the deadline, people here are reporting surprising results at a pretty mind-blowing cost per task, 2 orders of magnitude below the leader.

We improved our ARC AGI2 score to 29.72%, which is the new SOTA for the ARC AGI2.

This score is not legit for the arc prize competition because we got it after the deadline. It is legit for the ARC AGI leaderboard because the test data is the same.

Our solution costs $0.21 per task, while the previous SOTA of 29.40% cost $30 per task.
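As a quick check of the "2 orders of magnitude" remark, the ratio of the two quoted per-task costs is:

```latex
\frac{30\ \text{USD}}{0.21\ \text{USD}} \approx 143 \approx 10^{2.15}
```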

We'll disclose our method on Dec 5th, when the arc prize competition results are unveiled.

Nicolás Catalogna retweeted

Your starting point for uncovering how state-of-the-art reasoning models are trained at frontier labs. Keyword "starting point".

Nicolás Catalogna retweeted

Starlink direct-to-cell is expanding around the world...

In my opinion, it will be one of the most important technologies of this decade.

Starlink Direct to Cell operates the largest satellite-to-mobile constellation in the world, delivering data, voice, video and messaging to mobile dead zones across 5 continents.

Since first activating service earlier this year, more than 8M people and counting have relied on Starlink Direct to Cell to stay connected when terrestrial service is unavailable → starlink.com/direct-to-cell

Nicolás Catalogna retweeted

A breakdown of 𝗗𝗮𝘁𝗮 𝗣𝗶𝗽𝗲𝗹𝗶𝗻𝗲𝘀 𝗶𝗻 𝗠𝗮𝗰𝗵𝗶𝗻𝗲 𝗟𝗲𝗮𝗿𝗻𝗶𝗻𝗴 𝗦𝘆𝘀𝘁𝗲𝗺𝘀 👇 And yes, it can also be used for LLM based systems!

It is critical to ensure Data Quality and Integrity upstream of ML Training and Inference Pipelines; trying to do so in the downstream systems will inevitably cause failures when working at scale.

There is a ton of work to be done on the Data Lake or LakeHouse layer. 𝗦𝗲𝗲 𝘁𝗵𝗲 𝗲𝘅𝗮𝗺𝗽𝗹𝗲 𝗮𝗿𝗰𝗵𝗶𝘁𝗲𝗰𝘁𝘂𝗿𝗲 𝗯𝗲𝗹𝗼𝘄.

𝘌𝘹𝘢𝘮𝘱𝘭𝘦 𝘢𝘳𝘤𝘩𝘪𝘵𝘦𝘤𝘵𝘶𝘳𝘦 𝘧𝘰𝘳 𝘢 𝘱𝘳𝘰𝘥𝘶𝘤𝘵𝘪𝘰𝘯 𝘨𝘳𝘢𝘥𝘦 𝘦𝘯𝘥-𝘵𝘰-𝘦𝘯𝘥 𝘥𝘢𝘵𝘢 𝘧𝘭𝘰𝘸:

𝟭: Schema changes are implemented in version control; once approved, they are pushed to the Applications generating the Data, the Databases holding the Data, and a central Data Contract Registry.

Applications push generated Data to Kafka Topics:

𝟮: Events emitted directly by the Application Services.

👉 This also includes IoT Fleets and Website Activity Tracking.

𝟮.𝟭: Raw Data Topics for CDC streams.

𝟯: One or more Flink Applications consume Data from the Raw Data streams and validate it against schemas in the Contract Registry.

𝟰: Data that does not meet the contract is pushed to a Dead Letter Topic.

𝟱: Data that meets the contract is pushed to a Validated Data Topic.

𝟲: Data from the Validated Data Topic is pushed to object storage for additional Validation.

𝟳: On a schedule, Data in the Object Storage is validated against additional SLAs in the Data Contracts and pushed to the Data Warehouse to be Transformed and Modeled for Analytical purposes.

𝟴: Modeled and Curated data is pushed to the Feature Store System for further Feature Engineering.

𝟴.𝟭: Real Time Features are ingested into the Feature Store directly from Validated Data Topic (5).

👉 Ensuring Data Quality here is complicated since checks against SLAs are hard to perform.

𝟵: High Quality Data is used in Machine Learning Training Pipelines.

𝟭𝟬: The same Data is used for Feature Serving in Inference.
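Steps 3 to 5 (validate against the contract, then route to the Validated Data Topic or the Dead Letter Topic) can be sketched in plain Python. The contract format (field name mapped to an expected type) and the in-memory lists standing in for Kafka topics are our assumptions; a real Flink job would more likely validate against a registry-managed schema such as Avro or JSON Schema.

```python
from typing import Any, Dict, List, Tuple

# Hypothetical data contract: required field -> expected Python type
CONTRACT: Dict[str, type] = {"user_id": int, "event": str, "ts": float}

def violations(record: Dict[str, Any], contract: Dict[str, type]) -> List[str]:
    """List every way a record breaks the contract (empty list = valid)."""
    errors = []
    for field, expected in contract.items():
        if field not in record:
            errors.append(f"missing field: {field}")
        elif not isinstance(record[field], expected):
            errors.append(f"{field}: expected {expected.__name__}, "
                          f"got {type(record[field]).__name__}")
    return errors

def route(records: List[Dict[str, Any]], contract: Dict[str, type]
          ) -> Tuple[List[Dict[str, Any]], List[Dict[str, Any]]]:
    """Split records into (validated, dead_letter), mimicking steps 3 to 5."""
    validated, dead_letter = [], []
    for record in records:
        errors = violations(record, contract)
        if errors:
            dead_letter.append({"record": record, "errors": errors})  # keep the reason
        else:
            validated.append(record)
    return validated, dead_letter

ok, dlq = route([{"user_id": 1, "event": "click", "ts": 1.5},
                 {"user_id": "42", "event": "click", "ts": 2.0},
                 {"event": "view", "ts": 3.0}], CONTRACT)
print(len(ok), [d["errors"] for d in dlq])
```

Keeping the error list next to each dead-lettered record makes it possible to fix and replay it later.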

Note: ML Systems are plagued by other Data-related issues like Data and Concept Drift. These are silent failures, and while they can be monitored, we can’t include them in the Data Contract.

Let me know your thoughts! 👇

#LLM #AI #MachineLearning

Nicolás Catalogna retweeted

In my opinion, this is one of the best videos I've seen of robotic arms operating in real time with 100% autonomy, solving the task with such fluidity and such clean movements!

This comes from Generalist's new robot control model 🔥

nitter.net/GeneralistAI/sta…

New article! This one is half paper highlight, half essay. It's about a recent paper titled "ParaRNN" and its implications. I think this paper is very important for what it *means* for architecture research, not so much for the literal result. I also made and tested a reusable JAX function for anyone looking to experiment with this.

Importantly, it's the first time we've been able to smoothly trade off parallelism for true sequence-wise depth without sacrificing MFU.

Before, we could only choose one or the other. Transformers, deltanets, transnormers, etc. are all parallelizable, but they don't have the ability to perform computations *across* the sequence length like LSTMs.

This limits their ability to scale in long-context situations without enough layer depth to compensate, and adding layer depth doesn't scale. In practice, long-context understanding sits somewhere halfway between parallel computation and sequential synthesis.

This is something current architectures capture poorly. Now, however, we can directly control the trade-off between the number of sequential syntheses and parallelism/efficiency. This article covers that, as well as what else to take away from the paper.
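The post doesn't spell out the method. One published way to parallelize a nonlinear recurrence, which we understand ParaRNN builds on, is to treat all hidden states as unknowns of one system, solve it with Newton's method, and evaluate each linearized recurrence with a parallel prefix scan. Below is a NumPy sketch of that idea for a hypothetical scalar tanh RNN; it is not the paper's code or the author's JAX function.

```python
import numpy as np

def affine_scan(a: np.ndarray, b: np.ndarray):
    """Inclusive prefix composition of the maps h -> a[t] * h + b[t].

    Hillis-Steele scan: O(log T) vectorized steps instead of T sequential ones.
    Returns (A, B) such that h[t] = A[t] * h0 + B[t].
    """
    A, B = a.astype(float), b.astype(float)
    shift = 1
    while shift < len(a):
        A_prev = np.concatenate([np.ones(shift), A[:-shift]])
        B_prev = np.concatenate([np.zeros(shift), B[:-shift]])
        B = A * B_prev + B  # apply the earlier prefix first, then the current map
        A = A * A_prev
        shift *= 2
    return A, B

def cell(h_prev, x, w, u, c):
    # Hypothetical scalar tanh RNN cell: h_t = tanh(w * h_{t-1} + u * x_t + c)
    return np.tanh(w * h_prev + u * x + c)

def newton_rnn(x, h0, w, u, c, tol=1e-12):
    """Solve h_t = cell(h_{t-1}, x_t) for all t at once with Newton's method."""
    T = len(x)
    h = np.zeros(T)  # initial guess for every hidden state
    for _ in range(T):  # in exact arithmetic, after k iterations the first k states are exact
        h_prev = np.concatenate([[h0], h[:-1]])
        f = cell(h_prev, x, w, u, c)
        jac = w * (1.0 - f ** 2)  # d cell / d h_prev at the current guess
        # Linearized recurrence: h_t = jac_t * h_{t-1} + (f_t - jac_t * h_prev_t)
        A, B = affine_scan(jac, f - jac * h_prev)
        h_new = A * h0 + B
        if np.max(np.abs(h_new - h)) < tol:
            return h_new
        h = h_new
    return h

def sequential_rnn(x, h0, w, u, c):
    h, out = h0, []
    for xt in x:  # the usual T-step sequential loop, for comparison
        h = cell(h, xt, w, u, c)
        out.append(h)
    return np.array(out)

x = np.random.default_rng(0).normal(size=64)
print(np.max(np.abs(newton_rnn(x, 0.0, 0.5, 1.0, 0.1) - sequential_rnn(x, 0.0, 0.5, 1.0, 0.1))))
```

Each Newton iteration is a fixed number of vectorized passes (about log₂ T scan steps), so the sequential depth is set by the number of iterations rather than by the sequence length.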

Link in thread

Nicolás Catalogna retweeted

JSON vs TOON.

Is JSON really dead for LLM calls?

JSON is universal and excellent for nested data. TOON, in contrast, is a token-optimized alternative built for flat, tabular data; for that specific use case it cuts token usage by up to 60%.

Here’s how it works under the hood:

>TOON analyzes an array of uniform objects (where all items have the same keys).

>It declares the field keys (id, name, age) only once in a header.

>It streams the array items like a CSV, using indentation for structure.

>This eliminates repeating keys, quotes, and braces for every single row.

>For nested or non-uniform data, it falls back to a YAML-like structure and loses its efficiency.

How It Differs from JSON:

>JSON's syntax (braces, brackets, quotes) is excellent for handling nested objects and complex APIs.

>TOON's syntax (indentation, headers, minimal quotes) is built for flat tables and LLM prompts.

>JSON has a mature, universal ecosystem.

>TOON has an emerging, LLM specific ecosystem.

>TOON is inefficient for deep nesting, which JSON handles natively.

Why it matters:

>Up to 60% fewer tokens for tabular data

>Reduced LLM API costs.

>Faster prompt processing.

>Fits more tabular data into the LLM context window.

When to use it:

>LLM prompts containing flat, tabular data.

>Uniform arrays of objects (like database results).

>Always flatten nested structures before converting for best results.

>When you need to send large, simple tables to an LLM.
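A minimal sketch of the flattening step, assuming nested objects are dicts and that dotted keys such as `user.name` are an acceptable flat naming scheme (our convention, not something TOON mandates). The hypothetical `is_uniform` checks the precondition for TOON's tabular form: every row has the same keys.

```python
from typing import Any, Dict, List

def flatten(obj: Dict[str, Any], prefix: str = "", sep: str = ".") -> Dict[str, Any]:
    """Flatten nested dicts into one level, joining keys with `sep` (e.g. user.name)."""
    flat: Dict[str, Any] = {}
    for key, value in obj.items():
        name = f"{prefix}{sep}{key}" if prefix else key
        if isinstance(value, dict):
            flat.update(flatten(value, name, sep))  # recurse into nested objects
        else:
            flat[name] = value                       # lists and scalars are kept as-is
    return flat

def is_uniform(rows: List[Dict[str, Any]]) -> bool:
    """True if the list is non-empty and every row has the same set of keys as the first."""
    return bool(rows) and all(row.keys() == rows[0].keys() for row in rows)

rows = [{"id": 1, "user": {"name": "Alice", "age": 30}},
        {"id": 2, "user": {"name": "Bob", "age": 25}}]
flat_rows = [flatten(r) for r in rows]
print(flat_rows[0])  # {'id': 1, 'user.name': 'Alice', 'user.age': 30}
print(is_uniform(flat_rows))
```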

And no, JSON is not dead for LLM calls.