Engineering Manager

Chicago, IL

Joined February 2009

- Tweets 25,798

- Following 4,889

- Followers 557

- Likes 24,080

Raghava Nellaturu retweeted

Carl Ludwig Siegel's genius is one of the best-kept secrets in mathematics. You might want to check out the publicly available book 'Transcendental Numbers' to see just why André Weil called him "the greatest mathematician of the first half of the 20th century".

You should also check out his 'Lectures on the Geometry of Numbers' and 'Symplectic Geometry'.

DS-STAR: Data Science Agent via Iterative Planning and Verification -

arxiv.org/pdf/2509.21825?s=0…

Raghava Nellaturu retweeted

My "The Building Blocks of Today’s and Tomorrow’s Language Models" talk at the PyTorch Conference is now up on YouTube! piped.video/watch?v=nDl6Aj9a…

If you have 25 min this weekend, it's a whirlwind tour to catch you up on the key LLM architecture design considerations in popular LLMs this year (plus, an overview of alternative architecture designs).

The silver lining of my late arrival and rescheduling: Since there was no talk after mine, it's followed by a 30 min Q&A instead of just the usual 5 min :)

Raghava Nellaturu retweeted

TIL: Claude Code local sandbox environment is open-source.

> native OS sandboxing primitives (sandbox-exec on macOS, bubblewrap on Linux) and proxy-based network filtering. It can be used to sandbox the behaviour of agents, local MCP servers, bash commands and arbitrary processes.
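As a rough illustration of the Linux side of the quote above, here is a minimal sketch that builds a bubblewrap (`bwrap`) command line. It is not the open-sourced sandbox's actual configuration: the function name `build_bwrap_command` and the choice of mounts are assumptions made for this example, and it simply cuts off the network instead of doing proxy-based filtering.

```python
def build_bwrap_command(command, writable_dir, allow_network=False):
    """Return an argv list that runs `command` under bubblewrap (Linux only).

    Hypothetical helper for illustration; it only builds the argument list
    and does not execute anything.
    """
    argv = [
        "bwrap",
        "--ro-bind", "/", "/",                  # host filesystem visible, read-only
        "--dev", "/dev",                        # minimal private /dev
        "--proc", "/proc",                      # fresh /proc for the sandbox
        "--tmpfs", "/tmp",                      # private scratch space
        "--bind", writable_dir, writable_dir,   # the one writable directory
        "--die-with-parent",                    # kill the sandbox if the parent exits
    ]
    if not allow_network:
        # New, empty network namespace: the process gets no network access.
        argv.append("--unshare-net")
    # bwrap treats the first non-option argument as the command to run.
    return argv + list(command)
```

The resulting list can be handed to `subprocess.run` on a Linux machine with bubblewrap installed, for example `subprocess.run(build_bwrap_command(["ls", "-la"], "/home/user/project"))`.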

Raghava Nellaturu retweeted

I've been getting a lot of value using coding agents for code research tasks recently - I have a dedicated simonw/research GitHub repo and I frequently have them run detailed experiments and write up the results. Here's how I'm doing that + some examples:

simonwillison.net/2025/Nov/6…

Raghava Nellaturu retweeted

The fastest way to ship with AI isn't vibe coding. It's planning.

I built an AI image feature by spending 40 minutes NOT coding—just planning with specialized subagents that research, review, and question the spec. By the time I typed "/work," the implementation was already solved.

Full breakdown + compounding engineering philosophy: every.to/source-code/teach-y…

Raghava Nellaturu retweeted

absolute masterclass on coding with AI from @kieranklaassen today in @every

every.to/source-code/teach-y…

Raghava Nellaturu retweeted

Exciting big Kimi K2 Thinking release!

More experts, fewer heads, and even more thinking!

🚀 Hello, Kimi K2 Thinking!

The Open-Source Thinking Agent Model is here.

🔹 SOTA on HLE (44.9%) and BrowseComp (60.2%)

🔹 Executes up to 200 – 300 sequential tool calls without human interference

🔹 Excels in reasoning, agentic search, and coding

🔹 256K context window

Built as a thinking agent, K2 Thinking marks our latest efforts in test-time scaling — scaling both thinking tokens and tool-calling turns.

K2 Thinking is now live on kimi.com in chat mode, with full agentic mode coming soon. It is also accessible via API.

🔌 API is live: platform.moonshot.ai

🔗 Tech blog: moonshotai.github.io/Kimi-K2…

🔗 Weights & code: huggingface.co/moonshotai

TypeScript’s rise in the AI era: Insights from Lead Architect, Anders Hejlsberg - The GitHub Blog share.google/cKuiSgzzzEqlRTe…

Raghava Nellaturu retweeted

Today, we published a paper, “AI and the Future of Learning.” The paper outlines the opportunity of AI to reduce barriers to education and unlock human potential. It also addresses key questions like cognitive decline and cheating, and the need to build tools grounded in learning science.

Raghava Nellaturu retweeted

🏮Async QUIC and HTTP/3 made easy: tokio-quiche is now open-source

> Cloudflare's battle-tested

> asynchronous QUIC library combining both quiche and the Rust Tokio async runtime

> tokio-quiche handles millions of HTTP/3 requests per second with low latency and high throughput

blog.cloudflare.com/async-qu…

Raghava Nellaturu retweeted

On-policy distillation is powerful, but @thinkymachines's tinker only supports distilling from a teacher model within the same family, making it impossible for qwen to learn from deepseek, gpt-oss, etc.

For the first time, we enabled model-agnostic distillations natively using the tinker api, implemented in our open-source distillation framework spider. The framework now auto-detects differences in model naming and routes to cross-tokenizer KL computation in the training loop.

We follow the same algorithm that @huggingface implements in TRL for on-policy distillation, i.e., matching student and teacher token groups many-to-many and computing joint probabilities for each group.
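The group-matching idea can be sketched on plain strings. This is a toy under stated assumptions, not the TRL or spider implementation: tokens are non-empty text pieces that concatenate to the same string on both sides, and the function names `align_token_groups` and `joint_logprobs` are made up for this example. Each group's joint log-probability is the sum of its tokens' log-probabilities.

```python
def align_token_groups(student_tokens, teacher_tokens):
    """Pair up minimal runs of tokens that cover the same characters.

    Returns a list of (student_indices, teacher_indices) tuples.
    Assumes every token is a non-empty string.
    """
    if "".join(student_tokens) != "".join(teacher_tokens):
        raise ValueError("both token sequences must spell the same text")

    groups = []
    i = j = 0                # next unread token on each side
    s_end = t_end = 0        # characters consumed so far on each side
    s_start = t_start = 0    # first token index of the current group
    while i < len(student_tokens) or j < len(teacher_tokens):
        # Advance whichever side has consumed fewer characters.
        if s_end <= t_end and i < len(student_tokens):
            s_end += len(student_tokens[i])
            i += 1
        else:
            t_end += len(teacher_tokens[j])
            j += 1
        # Boundaries coincide: close the current many-to-many group.
        if s_end == t_end:
            groups.append((list(range(s_start, i)), list(range(t_start, j))))
            s_start, t_start = i, j
    return groups


def joint_logprobs(logprobs, index_groups):
    """Sum per-token log-probs within each group (chain rule)."""
    return [sum(logprobs[k] for k in idx) for idx in index_groups]
```

For instance, `["un", "believ", "able"]` and `["unbel", "iev", "able"]` align into two groups: the first two tokens on each side, then the final token on each side. A real pipeline would work on tokenizer offsets and handle special tokens, which this sketch ignores.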

With spider and tinker, model-agnostic distillation can be done in a GPU-free, fully server-side manner, which enables faster and more budget-friendly iteration on experiments.

With this feature enabled, we will launch distillation runs from a suite of different teacher models and study how they influence the training dynamics and downstream performance. Should be a ton of fun!

Raghava Nellaturu retweeted

We reproduced deepseek-ocr training from scratch. The code, model, and results can be found on our website #DeepSeek

pkulium.github.io/DeepOCR_we…

Raghava Nellaturu retweeted

Can’t believe it — our Princeton AI^2 postdoc Shilong Liu @atasteoff re-built DeepSeek-OCR from scratch in just two weeks 😳 — and open-sourced it. This is how research should be done 🙌 #AI #LLM #DeepSeek #MachineLearning #Princeton @omarsar0 @PrincetonAInews @akshay_pachaar

Discover DeepOCR: a fully open-source reproduction of DeepSeek-OCR, complete with training & evaluation code! #DeepLearning #OCR

Raghava Nellaturu retweeted

This aligns perfectly with what Anthropic just published about code execution with MCP

Link: anthropic.com/engineering/co…

In Claude Code, I've noticed Claude prefers writing and executing code directly in bash rather than using tool calls.

I've seen Python scripts appear, execute, and disappear in a single run. Claude knows it needs to minimize token usage and work as efficiently as possible.

Lately I’ve been testing both CLI and MCP in Claude Code... and honestly, the CLI wins

I list all my installed tools (vercel, supabase, gh, etc.) in CLAUDE.md and let Claude use them to check logs, run queries, or tweak configs.

Setup is simpler, auth is native, and observability is crystal clear.

There’s still debate on CLI vs MCP, but in my workflow, CLI is miles ahead

Raghava Nellaturu retweeted

I started to learn Lean a few months ago. Now I have finally completed my first Lean project! With about 10K lines of code, I formalized the a.s. convergence of Q learning and linear TD. Hope AI can formalize more RL theory in the future! github.com/ShangtongZhang/rl…

Raghava Nellaturu retweeted

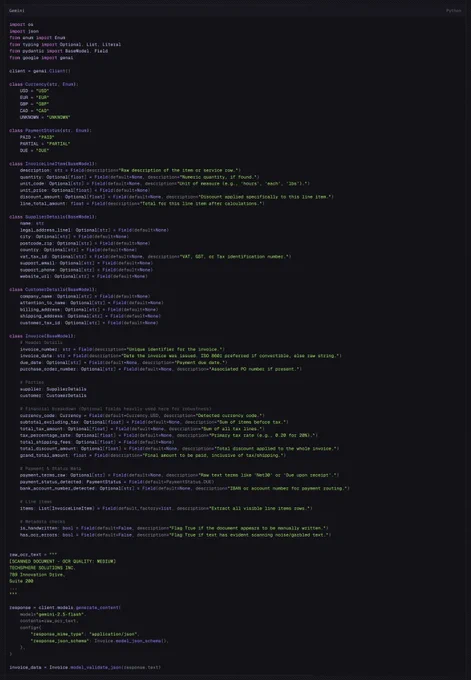

Here is an example of using a deeply nested Pydantic schema with 30+ fields, Unions, Optionals, and recursive structures with the new Structured Outputs in the Gemini API! 💥

Note: To enable this you need to use the new `response_json_schema` parameter instead of the old `response_schema`. Documentation and Snippet below.
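The original snippet is not reproduced here, so the following is only a small stand-in. It hand-writes a JSON Schema containing a union (`anyOf`), an optional field, and a recursive `$ref`, similar in shape to what a nested Pydantic model would produce, and places it under the `response_json_schema` key named in the note. The schema contents, the `build_generation_config` helper, and the exact way the resulting dict is passed to the SDK are assumptions for illustration.

```python
import json

# Hypothetical schema: a thread of comments where replies nest recursively.
THREAD_SCHEMA = {
    "type": "object",
    "properties": {
        "title": {"type": "string"},
        "comments": {"type": "array", "items": {"$ref": "#/$defs/Comment"}},
    },
    "required": ["title", "comments"],
    "$defs": {
        "User": {
            "type": "object",
            "properties": {"name": {"type": "string"}},
            "required": ["name"],
        },
        "Bot": {
            "type": "object",
            "properties": {"model": {"type": "string"}},
            "required": ["model"],
        },
        "Comment": {
            "type": "object",
            "properties": {
                "text": {"type": "string"},
                # Union: the author is either a User or a Bot.
                "author": {"anyOf": [{"$ref": "#/$defs/User"},
                                     {"$ref": "#/$defs/Bot"}]},
                # Optional: an integer or null, and not listed as required.
                "score": {"anyOf": [{"type": "integer"}, {"type": "null"}]},
                # Recursive: replies are themselves Comments.
                "replies": {"type": "array",
                            "items": {"$ref": "#/$defs/Comment"}},
            },
            "required": ["text", "author"],
        },
    },
}


def build_generation_config(schema):
    """Bundle a JSON Schema into a config dict (assumed request shape)."""
    return {
        "response_mime_type": "application/json",
        "response_json_schema": schema,  # the new parameter from the note
    }


config = build_generation_config(THREAD_SCHEMA)
print(json.dumps(config, indent=2)[:200])
```

With Pydantic, the schema dict would typically come from a model's `model_json_schema()` method rather than being written by hand.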