Joined November 2022

- Tweets 9,431

- Following 1,381

- Followers 401

- Likes 10,572

Sabry Akram retweeted

robotics was once said to lag 2-3 years behind AI due to hardware and sensor limitations

but the gap has narrowed fast.

today's robots can walk, run, handle complex objects, and perform tasks that were once limited to labs

what's missing now is the "brain"

a general AI capable of understanding goals, making decisions, and adapting on its own

Sabry Akram retweeted

This makes perfect sense once one thinks about incentives:

Binance wants BNB chain to eclipse ETH because CZ thinks he can be the next Ethereum

Tether wants BTC to dominate ETH as they hodl a lot of BTC from the early days of Bitfinex

Goldman and JP Morgan have no chain they are invested in and are being replaced by crypto payment rails, so they need to buy ETH, the most decentralized, neutral and reliable blockchain from the clients' perspective

------

"Show me the incentive, and I will show you the outcome." - Charlie Munger

------

In the end, both BNB and BTC are going to lose to ETH and DeFi because the core property of ETH is incorruptibility

“Wall Street doesn’t care about speed, they want $ETH.” - Tom Lee

Sabry Akram retweeted

At the rate AI and robot capabilities are accelerating, it’s hard to imagine this trend will reverse and we’ll somehow start creating tens of millions of new jobs (for people), no matter what new industries emerge.

We’re automating intelligence itself, and it will eventually be able to do everything humans can do.

This technological revolution is fundamentally different from all previous ones.

Grok 4 Fast has a massive 2M token context window that unlocks enterprise-scale reasoning

It easily remembers ultra-long chats, huge codebases, or hundreds of pages in a single go

The best part? xAI just updated it with massive performance gains:

Reasoning mode: 77.5% ➝ 94.1%

Non-reasoning mode: 77.9% ➝ 97.9%

Grok 4 Fast delivers near-flagship results with up to 98% lower cost

Sabry Akram retweeted

The top immunologist @DeryaTR_ just said the part everyone has been whispering: not even Grok Heavy or Gemini DeepThink comes close to GPT-5 Pro. And the wild thing is that this actually fits what people inside the ecosystem have been hinting at for months.

Here is the uncomfortable truth. OpenAI is running internal models that are far more capable than anything public right now. The gap is real. The other labs are sprinting. OpenAI is leading.

You can see it in the pattern. Sudden jumps in reasoning. Tool use that feels coordinated. Context windows that swallow entire bookshelves. Multimodal abilities that move from cute to genuinely useful. None of this happens without a deeper tier under the surface.

Most serious estimates say OpenAI is roughly six months ahead. On this acceleration curve, that is basically a full era.

If every frontier lab really launches new models next month, this winter is going to be the loudest release season in tech history.

Feels like we are living in the beta version of the future.

Sabry Akram retweeted

Bearish for the AI bubble:

- Chinese tech firms can train frontier models pretty cheaply

- They shoot straight to the top of the leaderboards and Hugging Face downloads, and they're open source

- They get incorporated into wrappers like Perplexity

This shows US foundation models have no moat

🚀 Hello, Kimi K2 Thinking!

The Open-Source Thinking Agent Model is here.

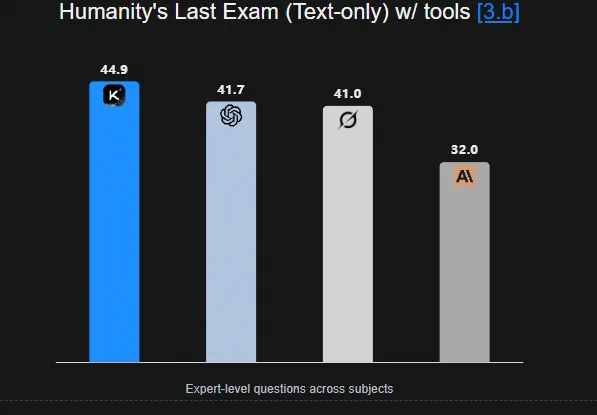

🔹 SOTA on HLE (44.9%) and BrowseComp (60.2%)

🔹 Executes up to 200–300 sequential tool calls without human intervention

🔹 Excels in reasoning, agentic search, and coding

🔹 256K context window

Built as a thinking agent, K2 Thinking marks our latest efforts in test-time scaling — scaling both thinking tokens and tool-calling turns.

K2 Thinking is now live on kimi.com in chat mode, with full agentic mode coming soon. It is also accessible via API.

🔌 API is live: platform.moonshot.ai

🔗 Tech blog: moonshotai.github.io/Kimi-K2…

🔗 Weights & code: huggingface.co/moonshotai

Sabry Akram retweeted

In a year or so, this level of performance should be available from a 32B dense model (K2 has 32B active parameters) at a cost of < $0.2/million tokens

I don't think folk have that in their estimates

Sabry Akram retweeted

another solid breakthrough from Google...

they're introducing nested learning:

"a new ML paradigm for continual learning"

'Hope' is a self-updating, long-context memory architecture that generalizes 'Titans' beyond two update levels, allowing continual learning without forgetting

too early to say, but it seems a real step toward fixing the memory problem

Sabry Akram retweeted

Introducing Nested Learning: A new ML paradigm for continual learning that views models as nested optimization problems to enhance long context processing. Our proof-of-concept model, Hope, shows improved performance in language modeling. Learn more: goo.gle/47LJrzI

@GoogleAI

Sabry Akram retweeted

jensen huang is right

china will likely win the AI race.

they're releasing open-source models to weaken U.S. funding, while catching up in compute and next-gen models

they already dominate in robotics

and if AI levels out, they'll win through hardware strength

Sabry Akram retweeted

AI is eating all the jobs.

Job postings fell 37% from the April 2022 peak, landing back at February 2021 levels. The entire pandemic hiring boom got deleted in thirty months while everyone argued about return-to-office policies.

They laid off 300,000 tech workers over three years. Then AI made sure those jobs never came back.

Stack Overflow cut 28% of staff because Copilot does what junior developers used to do. Duolingo stopped hiring contractors for work AI handles now. IBM froze 7,800 positions to automate instead.

Software engineer postings down 49%. Web developers down 60%.

Every company runs the same filter now before approving new headcount: "Can AI do this?" Just asking the question freezes hiring for months while they evaluate.

New postings are only 4% above pre-pandemic levels even though millions more workers entered the labor force. Do the math.

The BLS data is delayed from the government shutdown. When it drops, it will confirm what Indeed has been screaming. They fired the workforce, then AI replaced the hiring pipeline itself.

You keep waiting for the AI job apocalypse to arrive. You already lived through it and didn't notice because unemployment stayed at 4.1%.

BREAKING: Job postings on Indeed fell 6.4% YoY in the week ending October 31st, reaching the lowest level since February 2021.

Postings have now declined 36.9% since the April 2022 peak.

The number of available vacancies is now only 1.7% above pre-pandemic levels seen in February 2020.

Furthermore, new job postings are just 4.1% above those levels.

This signals further deterioration ahead in BLS job openings data, which has been delayed due to the government shutdown.

Labor market weakness is spreading.
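The quoted figures hang together arithmetically: a level 1.7% above the pre-pandemic baseline after a 36.9% drop pins down where the April 2022 peak must have been. A minimal sketch, assuming the postings series is indexed so that the February 2020 level equals 100 (an assumption about the normalization, not something the tweet states); `implied_peak` is a hypothetical helper written for illustration.

```python
# Hypothetical helper: back out a series' peak level from its current level
# (relative to a baseline) and the percentage decline since the peak.
# Assumption: the series is indexed so that the February 2020 level = 100.

BASELINE = 100.0  # assumed index value for February 2020 (pre-pandemic)


def implied_peak(current_vs_baseline_pct: float, decline_from_peak_pct: float) -> float:
    """Return the implied peak index level.

    current_vs_baseline_pct: how far the current level sits above baseline (e.g. 1.7)
    decline_from_peak_pct: the drop from the peak to the current level (e.g. 36.9)
    """
    current = BASELINE * (1 + current_vs_baseline_pct / 100)
    # current = peak * (1 - decline), so divide to recover the peak
    return current / (1 - decline_from_peak_pct / 100)


peak = implied_peak(1.7, 36.9)
print(f"implied April 2022 peak: {peak:.1f} (about {peak - BASELINE:.0f}% above February 2020)")
```

Under that assumption the peak sits near 161, roughly 61% above the pre-pandemic level; that excess is the pandemic hiring boom the earlier tweet describes as having been erased.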

Sabry Akram retweeted

A few thoughts on the Kimi-K2-thinking release

1) Open source is catching up and in some cases surpassing closed source.

2) More surprising: the rise of open source isn’t coming from the U.S., as was still expected at the end of last year (Llama), but from China, kicked off in early 2025 with DeepSeek and now confirmed by Kimi.

3) The sanctions against China aren’t achieving their intended effect; on the contrary, the chip restrictions are pushing Chinese scientists to become far more creative, essentially making a virtue of necessity.

4) Taken together, this leads to the conclusion that China is not just becoming an increasingly strong competitor to the U.S. but is now catching up, and it’s no longer clear who will win the race toward AGI.

Sabry Akram retweeted

At an FDV of $1.26 billion, once ZKsync generates $120 million in fees per year it will have a ridiculously low P/E of roughly 10

With a baseline of 15,000 TPS per Elastic Chain instance, that appears highly achievable by creating private L2s for Deutsche Bank, Abu Dhabi banks, etc., and running composable perp DEXs

All those interconnected chains will generate interop fees for ZKsync

There's no limit to the number of parallel Elastic Chains that can be created: it's unlimited parallelism

Few
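The multiple in the ZKsync tweet comes down to a single division. A minimal sketch, taking the tweet's figures at face value and, as the tweet does, treating annual fee revenue as a stand-in for earnings (fees are revenue, so a P/E based on actual profit would be higher once costs are subtracted); `price_to_fees` is a hypothetical name used only for illustration.

```python
# Illustrative only: price-to-fees multiple from the figures quoted above.
# Fee revenue is used as a stand-in for earnings, following the tweet's framing.


def price_to_fees(fdv_usd: float, annual_fees_usd: float) -> float:
    """Fully diluted valuation divided by annual fee revenue."""
    if annual_fees_usd <= 0:
        raise ValueError("annual fees must be positive")
    return fdv_usd / annual_fees_usd


multiple = price_to_fees(1.26e9, 120e6)  # $1.26B FDV, $120M fees per year
print(f"FDV / annual fees = {multiple:.1f}")
```

The quoted numbers give 10.5, which the tweet rounds down to 10.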

Sabry Akram retweeted

Is DeepSeek still a startup?

Technical validation gave it strategic immunity in China’s AI ecosystem. The company can now focus on pushing architectural boundaries instead of chasing market returns.

This only works in China. A distinctly Chinese evolution.

Grok upgrades

New Grok upgrade:

- grok-4-fast-reasoning: 77.5% -> 94.1%

- grok-4-fast-non-reasoning: 77.9% -> 97.9%

My tests show significant ability increases in reasoning for potential agentic work.

The fastest improving AI model in history…

(Via @xlr8harder)