AI-powered Decentralized Exchange. Unifying fragmented liquidity across chains with intelligent routing, non-custodial security, and high-performance execution.

Joined August 2025


AI doesn't just automate. It finds the signal in the noise. 🧠

Tacen's AI acts as your risk manager, proactively scanning for volatility, liquidation risk, and whale behavior before you trade. It's security and intelligence, built into one platform.

TacenApp retweeted

📢 MASSIVE: This new paper proved GPT-5 (medium) now far exceeds (>20%) pre-licensed human experts on medical reasoning and understanding benchmarks.

GPT-5 beats human experts on MedXpertQA multimodal by 24.23% in reasoning and 29.40% in understanding, and on MedXpertQA text by 15.22% in reasoning and 9.40% in understanding. 🔥

It compares GPT-5 to actual professionals in good standing and claims AI is ahead.

GPT-5 is tested as a single, generalist system for medical question answering and visual question answering, using one simple, zero-shot chain of thought setup.

⚙️ The Core Concepts

The paper positions GPT-5 as a generalist multimodal reasoner for decision support, meaning it reads clinical text, looks at images, and reasons step by step under the same setup.

The evaluation uses a unified protocol, so prompts, splits, and scoring are standardized to isolate model improvements rather than prompt tricks.

---

My take: The medical sector takes one of the biggest shares of national budgets across the globe, even in the USA, where it surpasses military spending.

Once AI or robots can bring down costs, governments everywhere will quickly adopt them because it’s like gaining extra funds without sparking political controversy.

🧬 Bad news for medical LLMs.

This paper finds that top medical AI models often match patterns instead of truly reasoning.

Small wording tweaks cut accuracy by up to 38% on validated questions.

The team took 100 MedQA questions, replaced the correct choice with "None of the other answers" (NOTA), then kept the 68 items where a clinician confirmed that the substituted option was the correct answer.

If a model truly reasons, it should still reach the same clinical decision despite that label swap.

They asked each model to explain its steps before answering and compared accuracy on the original versus modified items.

All 6 models dropped on the NOTA set. The biggest hit was 38%, and even the reasoning models slipped.

That pattern points to shortcut learning: the systems latch onto answer templates rather than working through the clinical logic.

Overall, the results show that high benchmark scores can mask a robustness gap, because small format shifts expose shallow pattern use rather than clinical reasoning.
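The comparison described above can be sketched in a few lines of Python. This is a minimal illustration, not the paper's code: the `Item` structure, the `to_nota` and `accuracy_drop` helpers, the toy questions, and the `pattern_matcher` stand-in model are all hypothetical, and the drop is computed here as an absolute difference in accuracy, which is an assumption about how the 38% figure is defined.

```python
from dataclasses import dataclass

NOTA = "None of the other answers"


@dataclass
class Item:
    question: str
    options: dict  # label -> option text
    answer: str    # label of the correct option


def to_nota(item):
    """Swap the correct option's text for NOTA; the correct label is unchanged."""
    options = dict(item.options)
    options[item.answer] = NOTA
    return Item(item.question, options, item.answer)


def accuracy(items, predict):
    """Fraction of items where the predicted label equals the correct label."""
    return sum(predict(it) == it.answer for it in items) / len(items)


def accuracy_drop(items, predict):
    """Accuracy on the original items minus accuracy on the NOTA variants."""
    modified = [to_nota(it) for it in items]
    return accuracy(items, predict) - accuracy(modified, predict)


# Toy data and a toy "shortcut" model (both hypothetical): it only recognizes
# memorized answer strings and guesses "A" when the string is missing.
items = [
    Item("q1", {"A": "aspirin", "B": "heparin"}, "B"),
    Item("q2", {"A": "insulin", "B": "metformin"}, "A"),
]
memorized = {"q1": "heparin", "q2": "insulin"}


def pattern_matcher(item):
    for label, text in item.options.items():
        if text == memorized[item.question]:
            return label
    return "A"  # fallback guess when the memorized string is gone


drop = accuracy_drop(items, pattern_matcher)
```

A model that reasons to the same clinical decision would pick the NOTA option once the familiar string disappears, so its drop would stay near zero. The toy matcher cannot, which is the behavior the post calls shortcut learning.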

This is the real difference: using AI vs shaping AI 👇

In a market full of risks, your best defense is intelligence 🧠⚡

#Tacen AI = your personal risk manager 🤖

📉 Predicts volatility

⚠️ Flags liquidation risk

🐋 Spots whale moves

Smart #security, powered by #AI 🔒🚀 #RiskManagement #DeFi

Custodial exchanges are a primary target for hackers. The solution? Remove the target. 🎯

A hybrid model reduces custodial attack vectors by design. Your funds are never held by the exchange, making the platform safer and more resilient.

#Tacen #Hybrid #Security #NonCustodial

TacenApp retweeted

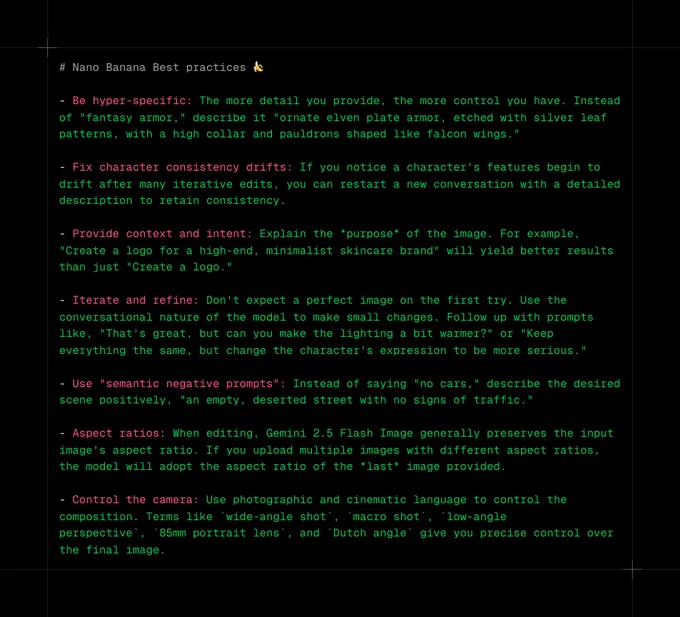

Gemini 2.5 Flash Image (Nano Banana) best practices 🍌🍌🍌

- Be hyper-specific: The more detail you provide, the more control you have. Instead of "fantasy armor," describe it "ornate elven plate armor, etched with silver leaf patterns, with a high collar and pauldrons shaped like falcon wings."

- Fix character consistency drifts: If you notice a character's features begin to drift after many iterative edits, you can restart a new conversation with a detailed description to retain consistency.

- Provide context and intent: Explain the *purpose* of the image. For example, "Create a logo for a high-end, minimalist skincare brand" will yield better results than just "Create a logo."

- Iterate and refine: Don't expect a perfect image on the first try. Use the conversational nature of the model to make small changes. Follow up with prompts like, "That's great, but can you make the lighting a bit warmer?" or "Keep everything the same, but change the character's expression to be more serious."

- Use "semantic negative prompts": Instead of saying "no cars," describe the desired scene positively, "an empty, deserted street with no signs of traffic."

- Aspect ratios: When editing, Gemini 2.5 Flash Image generally preserves the input image's aspect ratio. If you upload multiple images with different aspect ratios, the model will adopt the aspect ratio of the *last* image provided.

- Control the camera: Use photographic and cinematic language to control the composition. Terms like `wide-angle shot`, `macro shot`, `low-angle perspective`, `85mm portrait lens`, and `Dutch angle` give you precise control over the final image.

In a volatile market, data is your compass 🧭📊

#Tacen integrates 30+ sources 🌐 (on-chain, prices, social) → turning raw data into actionable signals ⚡

Smarter insights. Better decisions 🚀

#DataScience #Trading #marketintelligence

The path to mass adoption is trust and transparency. At Tacen, your assets stay in your control while we provide a regulated platform—the safer bridge to a better digital asset ecosystem. 🌉

#DeFi #Regulation #Blockchain

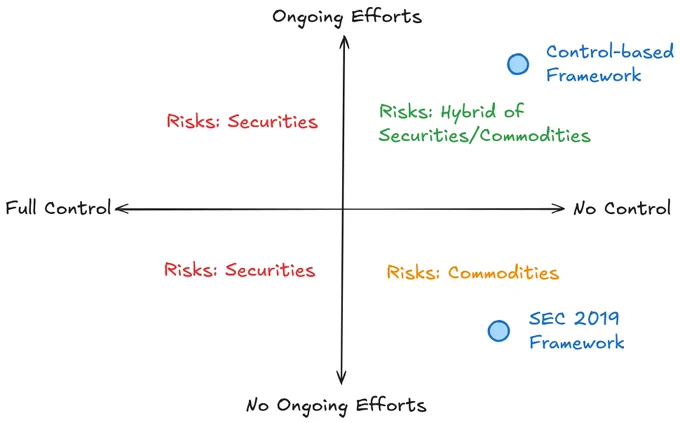

The SEC’s decentralization framework has been corrupted and used to persecute builders, incentivizing rug pulls over value creation.

It’s time to fix it.

Decentralization should mean the absence of control, not the suppression of ongoing efforts.

Here’s how and why👇

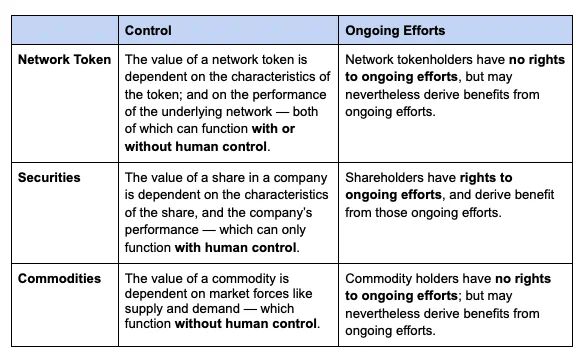

Network tokens, meaning tokens that derive substantial value from the operation of a blockchain (e.g., BTC, ETH, SOL), can have embedded trust dependencies that result in information asymmetries.

Where those trust dependencies are minimized, network tokens look like commodities with low information asymmetry risk.

Where those trust dependencies are significant, network tokens can look like securities with high information asymmetry risk, which supports the use of disclosure requirements under securities laws.

The two primary trust dependencies relating to network tokens are: (1) control and (2) ongoing efforts.

The SEC’s 2019 Decentralization Framework sought to eliminate the risk of information asymmetries by defining decentralization to mean the absence of BOTH control and ongoing efforts. Their reasoning was clear: where network tokens were “sufficiently decentralized,” the tokens functioned more like commodities.

But this is the wrong approach!

It’s created significant perverse incentives — builders can actually reduce their regulatory risk by abandoning their projects or by masking their ongoing efforts (“decentralization theater”).

Builders HATE this conception of decentralization.

We need to incentivize builders, not hinder them. A better two-pronged approach can achieve this:

1⃣Decentralization should be redefined as “the absence of control.” This eliminates significant risk and preserves one of the core features of blockchains – they can function without human control.

2⃣A targeted and light-touch disclosure framework can be used to eliminate information asymmetries that might arise from ongoing efforts.

Collectively, this approach would foster innovation and protect users – ensuring decentralization empowers builders rather than being weaponized against them.

Full article linked below.

TacenApp retweeted

🚨BREAKING: Vibe coding just got a massive upgrade

Lindy AI just dropped, and it can automate everything like it’s nothing.

9 Wild examples you don’t want to miss:

What separates professional traders from everyone else?

Not instinct — it’s intelligence + technology 🧠⚡

🤖 AI closes the gap → predictive insights 📊 + automated execution ⚙️

Simplicity isn’t a shortcut.

It's the new standard for success 🚀✅ #TACEN #DEX #AiTrading

GM, Web3 fam! ☕

The sun is up, and a new week of building is here. Web3 isn't just a vision—it's the foundation of a more open, transparent, and user-owned internet. Let's keep building the future, one block at a time. 🚀

TacenApp retweeted

OpenAI Agents SDK crash course covering AI Agent orchestration, tool use, structured outputs, multi-agent workflows and voice agents.

Learn AI Agent concepts from basics to advanced with step-by-step code tutorials.

100% free and open source.

In a market full of noise, we choose to focus on what matters.

As CZ once said, the best marketing is a great product. 🚀

#Tacen's vision is built not on words, but on our AI engine, hybrid architecture, and non-custodial security. We're letting the product speak for itself.

🔥ICYMI: CZ at #BNBDay Tokyo:

“If I were 20 years younger, I’d build...

▪️AI trading agent

▪️Privacy-focused perp DEX

DEX volumes could surpass CEX.🚀

Next big breakthroughs? Blockchain x AI, RWA, and stablecoins.”

AI isn't just about automation. It's finding the signal in the noise of a volatile market, and making data-driven decisions. AI is the co-pilot that cuts through the chaos. It identifies key trends, analyzes sentiment, and provides the clarity you need to trade with confidence.

TacenApp retweeted

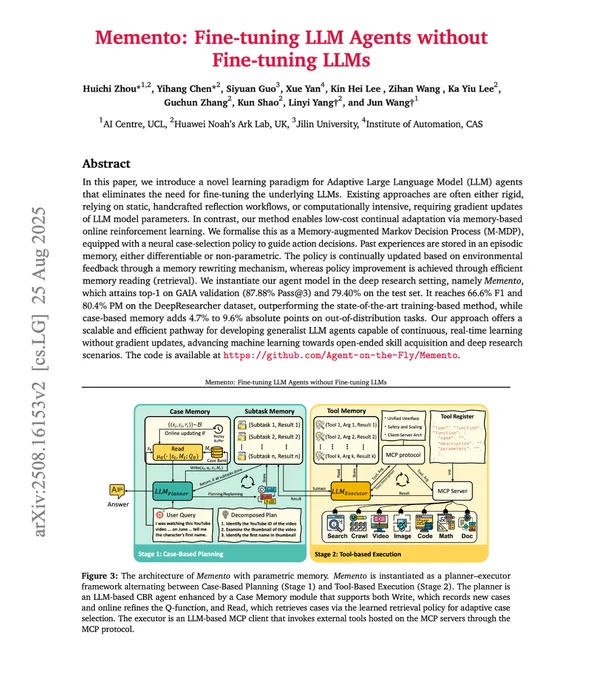

Fine-tuning LLM Agents without Fine-tuning LLMs!

Imagine improving your AI agent's performance from experience without ever touching the model weights.

It's just like how humans remember past episodes and learn from them.

That's precisely what Memento does.

The core concept:

Instead of updating LLM weights, Memento learns from experiences using memory.

It reframes continual learning as memory-based online reinforcement learning over a memory-augmented MDP.

Think of it as giving your agent a notebook to remember what worked and what didn't!

How does it work?

The system breaks down into two key components:

1️⃣ Case-Based Reasoning (CBR) at work:

Decomposes complex tasks into sub-tasks and retrieves relevant past experiences.

No gradients needed, just smart memory retrieval!

2️⃣ Executor

Executes each subtask using MCP tools and records outcomes in memory for future reference.

Through MCP, the executor can accomplish most real-world tasks & has access to the following tools:

🔍 Web research

📄 Document handling

🐍 Safe Python execution

📊 Data analysis

🎥 Media processing
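The memory loop described above (retrieve similar past cases, act, record the outcome) can be sketched roughly as follows. This is an illustration under stated assumptions, not Memento's implementation: `Case`, `CaseMemory`, and `run_task` are hypothetical names, the planner and executor are passed in as plain callables rather than an LLM and MCP tools, and retrieval uses simple word-overlap (Jaccard) similarity as a stand-in for whatever retrieval policy the real system uses.

```python
from dataclasses import dataclass, field


@dataclass
class Case:
    task: str
    plan: str
    success: bool


@dataclass
class CaseMemory:
    cases: list = field(default_factory=list)

    def record(self, task, plan, success):
        """Store the outcome of one episode; no model weights are touched."""
        self.cases.append(Case(task, plan, success))

    def retrieve(self, task, k=2):
        """Return the k stored cases whose task wording overlaps most with `task`."""
        query = set(task.lower().split())

        def score(case):
            words = set(case.task.lower().split())
            union = query | words
            return len(query & words) / len(union) if union else 0.0

        return sorted(self.cases, key=score, reverse=True)[:k]


def run_task(task, memory, plan_fn, execute_fn):
    """One episode: retrieve past cases, plan with them, execute, then record."""
    past = memory.retrieve(task)
    plan = plan_fn(task, past)   # hypothetical planner (an LLM call in practice)
    success = execute_fn(plan)   # hypothetical executor (tool calls in practice)
    memory.record(task, plan, success)
    return success


memory = CaseMemory()
memory.record("summarize pdf report", "use document tool", True)
memory.record("plot sales data", "use python", True)
best = memory.retrieve("summarize pdf invoice", k=1)[0]
```

Every call to `run_task` appends a new case, so later retrievals can draw on it. That is the "notebook" idea from the post: behavior changes through what is stored and retrieved rather than through gradient updates.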

I found this to be a really good path toward building human-like agents.

👉 Over to you, what are your thoughts?

I have shared the relevant links in the next tweet!

_____

Share this with your network if you found this insightful ♻️

Find me → @akshay_pachaar for more insights and tutorials on AI and Machine Learning!

Imagine a map where every #blockchain is an isolated island 🏝️

#Crosschain liquidity is the bridge 🌉 that connects them all.

It unifies fragmented markets 🔗 and brings the best opportunities 📊✨ right to your fingertips 🤲

🌐 Welcome to the interconnected world of #Web3 🚀